V Quantum Computation

This section discusses the most important quantum-computation algorithms, associated with the names Deutsch and Josza, Shor, and Grover. To get a feeling of algorithms we start with the simple, classical example of adding two numbers.

V.1 Adding numbers

The discussion in Section III implies that quantum computers can achieve, at least, the same tasks as a classical computer. Since this requires reversible logics, we first illustrate this by an example, namely, the computation of the sum of two numbers.

Binary numbers can be added just like decimal numbers, bit by bit starting with the least significant bits, and carrying over bits to the next more significant bit. Consider, e.g., the sum , which in binary code is . We first add the last two bits (i.e., the least significant bits), resulting in . The last bit of this (0) is the last bit of our result, while the first bit needs to be carried over. We then add the first two bits, and to this the carry-on bit, giving . This again results in a carry-on bit. There are no further bits to be processed, so the carry-on bit becomes the most significant bit. Our result now has three bits: 110, which is the same as .

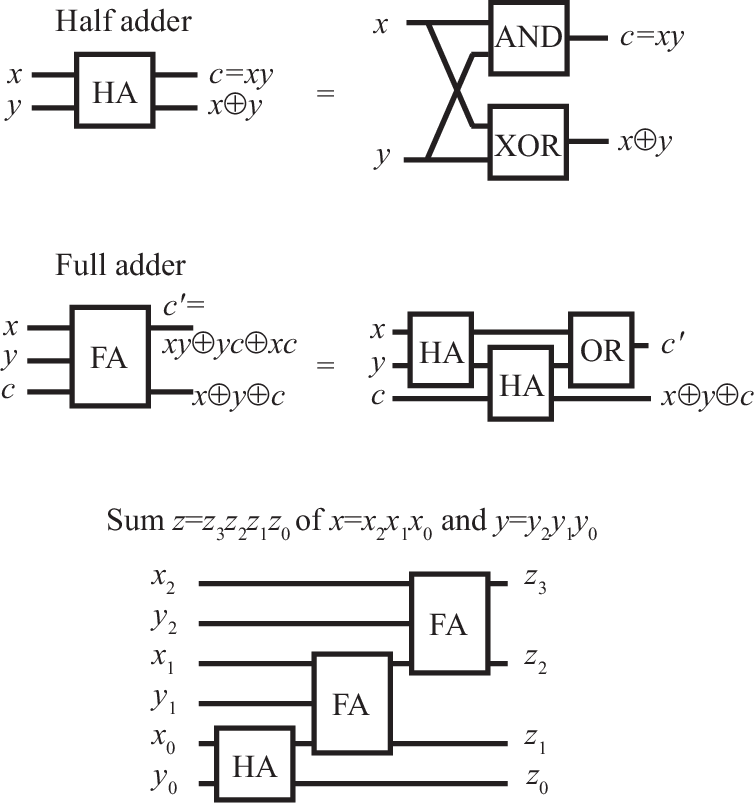

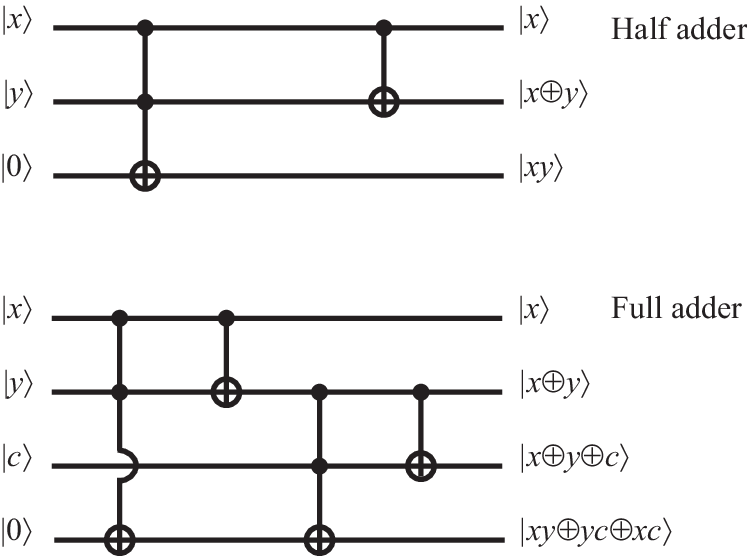

In classical computers, these operations can be carried out using half-adder and full-adder components, which can be realized using elementary binary gates as shown in Fig. 10. Because of , the depicted circuit is irreversible (furthermore, it requires to copy bits). Figure 11 shows that the same operations can also be achieved quantum mechanically using a combination of reversible CNOT and CCNOT gates, which generate additional outputs that can be used to reverse the calculation.

Adding two numbers with reversible gates does not increase the efficiency of the algorithm. This is in contrast to the following examples, which concern true quantum algorithms that solve specific tasks more efficiently than classical algorithms.

V.2 Deutsch-Josza algorithm

Efficient quantum algorithms generally exploit the quantum parallelism to carry out many calculations in one step, which is particularly useful if one is interested in a global property of these calculations. This is nicely illustrated by the Deutsch-Josza algorithm, which allows to establish a specific feature of certain Boolean functions that specifically fall into one of the following two classes: either the function is constant (i.e., the output bit is either always 1, or always 0), or it is balanced (i.e., the output bit is 0 for one half of the input values, and 1 for the remaining half).

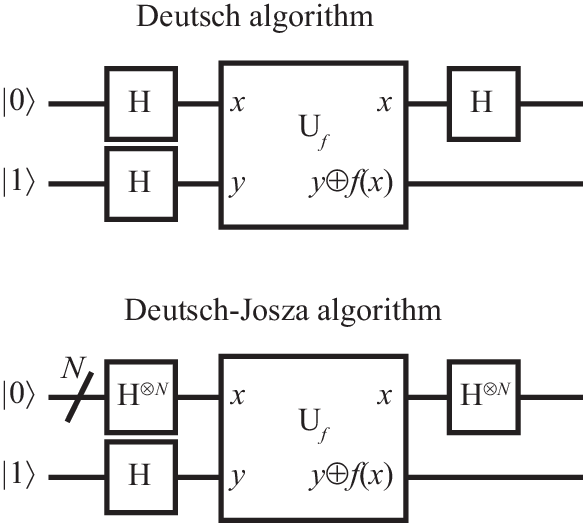

Assume that we are given a function which is guaranteed to be either constant or balanced, but at the outset we do not know to which of the two classes it belongs. Classically, we need at least two function evaluations to find out that a function is balanced—and this is only in the fortunate case that we immediately pick two inputs for which the outputs differ. In order to certify that a function is constant we need function evaluations—that’s because if the function is balanced, we may by chance first pick inputs that all result in the same output. In contrast, using quantum gates, the Deutsch-Josza algorithm, shown in Fig. 12, requires only a single function evaluation to determine whether the function is constant or balanced. (The upper panel of the figure shows the Deutsch algorithm, the special case of a function with single-bit input, which is addressed on worksheet 3.)

Starting from the initial state , Hadamard gates are used to form the superposition

(see also Fig. 6(b)). If , the function gate changes the state of the last qubit into ; for , the state of this qubit remains . Therefore, the function gate introduces factors in front of each basis state , resulting in

In the final step, Hadamard gates are applied to all but the last qubit. Using the result shown in Fig. 6(c), this delivers the final state

Let us now have a look at the amplitude of the state with , which is given by . If is balanced, this amplitude vanishes. On the other hand, if is constant, this amplitude takes the value (depending on whether or ). Therefore, if is constant, a measurement of all qubits will find them all taking the value , while this will never happen if the function is balanced. Remarkably, using quantum parallelism, the distinction of both cases has been achieved with a single operation of the function gate.

A notable ingredient of the Deutsch-Josza is the introduction of conditional phase factors (such as ) in front of each of the computational basis states. This strategy is also at the core of the practically more important algorithms discussed next.

V.3 Grover’s quantum search algorithm

Grover’s quantum search algorithm exploits quantum parallelism to speed up the search for solutions among a large set of candidate solutions. A prime example is the search for entries in a database which match to a given key. Let us enumerate all entries by an integer index , and assume for simplicity that the size of the database is . The entries matching the key can then be characterized by an oracle function , which is a Boolean function that returns if entry matches to the key; otherwise, .

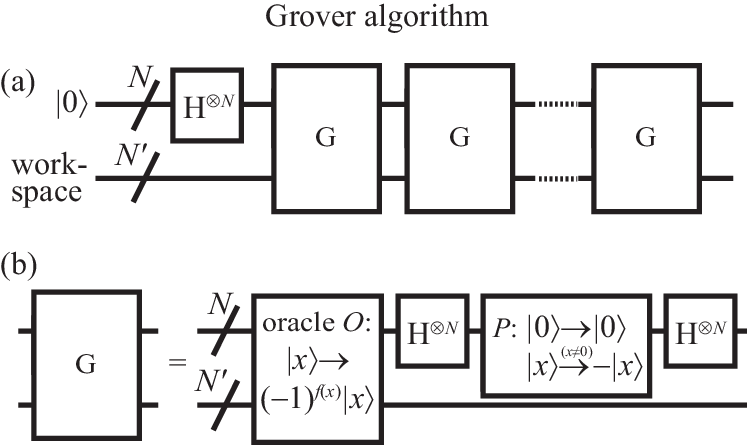

Assume that there are entries matching the key. Classically, we need to make queries of the database (or calls of the oracle function) to find one of these entries. The Grover algorithm, shown in Fig. 13, only requires calls of the oracle function—not an exponential speedup, but still sizeable when the database is large.

The first step initializes the index register in the now very familiar equal-superposition state . This is followed by a sequence of operations , known as the Grover iterate, in which the oracle gate is called once. Its action is defined to flip the phase of all solutions: . As we have seen in the Deutsch-Josza algorithm, this can be achieved with a function gate acting as , where the oracle qubit is initialized as (Fig. 13 reserves workspace qubits for the implementation of the oracle). The Grover iterate also contains a conditional phase gate , which inverts the phase of all computational basis states with exception of the state . This is embedded into Hadamard gates, resulting into

The Grover iterate can therefore be written as

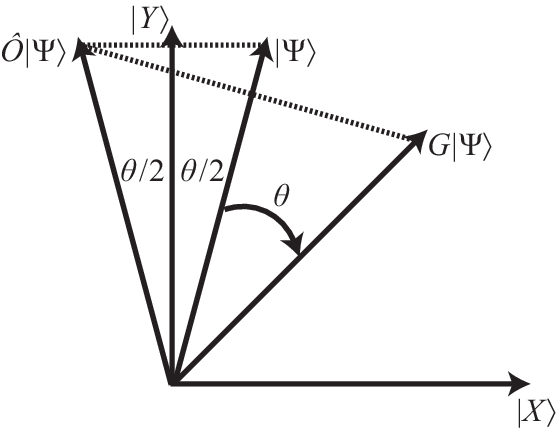

The purpose of the Grover iterate is to rotate the initial state into the direction of the equal superposition of solutions , where we have enumerated all solutions as , . This rotation takes place in a plane spanned by and , the equal superposition of all non-solutions , . Figure 14 illustrates how this works. The initial state can be written as

and therefore lies in the , plane. The unit vector

can be parameterized in terms of the angle between and the axis. For , is small, such that is almost parallel to the axis.

Per definition, the oracle function acts as

which amounts to a reflection about the axis. The phase gate performs another reflection, now about the axis. As a result, the state is rotated by an angle towards the axis. Repeating this rotation times rotates the state vector close to the axis—the misalignment will be less than . With high probability, a measurement of the final state will therefore deliver a solution of the search problem.

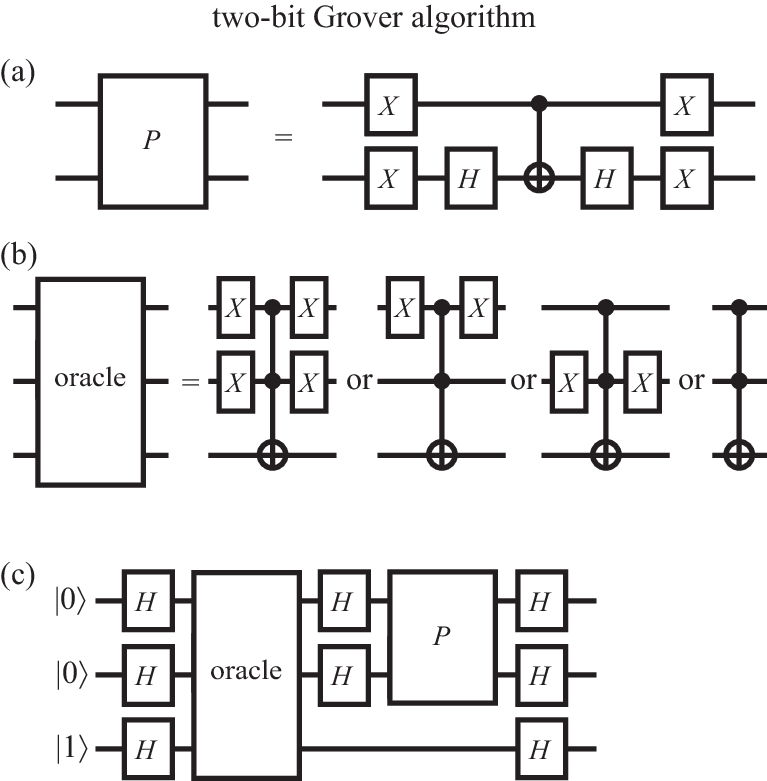

An instructive example is a two-bit search with one solution , depicted in Fig. 15. Panel (a) shows a realization of the gate with , , and CNOT gates (as a matter of fact, this realizes , but an overall phase factor of a quantum state is non-detectable). Depending on the binary representation of , the oracle function takes one of four possible forms, which can be implemented using Toffoli (CCNOT) and (NOT) gates as shown in panel (b). For , , the angle of the initial state vector with the axis is . The Grover iterate rotates the state by . A single iteration therefore rotates the state onto the axis, which immediately identifies the matching entry . In contrast, a classical search would on average require oracle calls before the solution is found.

V.4 Quantum Fourier transformation

Consider the Fourier transformation

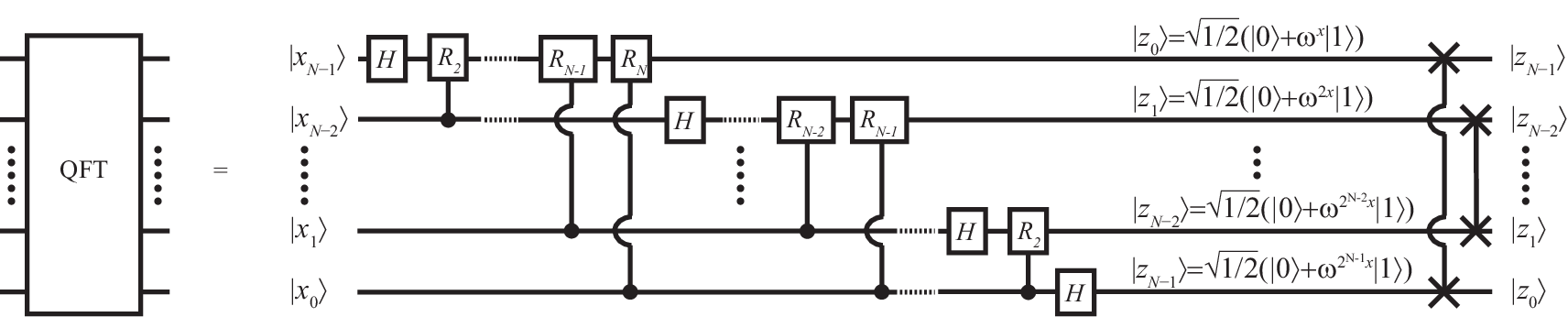

of an -bit function . Here, we abbreviated , such that (where is an ordinary, not bitwise, multiplication). Classically, implementation of the Fourier transformation takes elementary gate operations. In contrast, its quantum-mechanical analogue, the quantum Fourier transformation

can be implemented with gate operations, which constitutes an exponential increase in efficiency that can be exploited in a range of algorithms.

The corresponding circuit is shown in Fig. 16. The verification that the depicted circuit computes the quantum Fourier transformation can be based on the product representation

where . Because of the periodicity of the phase, the phase factors can be written as , where we introduced the binary fractions

in a notation analogous to the one denoting fractional decimal numbers. As shown in the figure, the required transformation of each qubit can be achieved efficiently by first applying a Hadamard gate, followed by phase rotation gates

that are controlled by the less significant qubits.

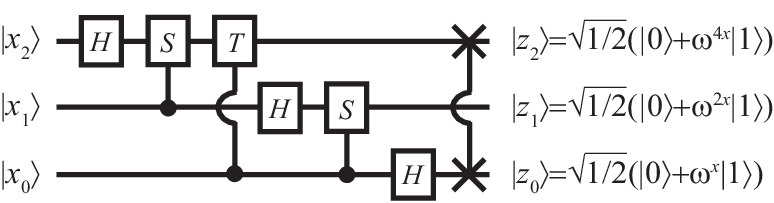

An instructive example is the three-bit quantum Fourier transformation, where . In this case, is the gate, and is the phase gate. The corresponding circuit is shown in Fig. 17.

V.5 Applications: From phase estimation to prime factorization

Phase estimation.—A key application of the quantum Fourier transformation is the estimation of the (reduced) phase of an eigenvalue of a unitary operator , where . This can be used for a problem called order finding, which in itself is a central step in the prime factorization of numbers. Phase estimation can also be used for a problem know as quantum counting. These problems are briefly discussed later; at the moment it suffices to know that they involve different operators .

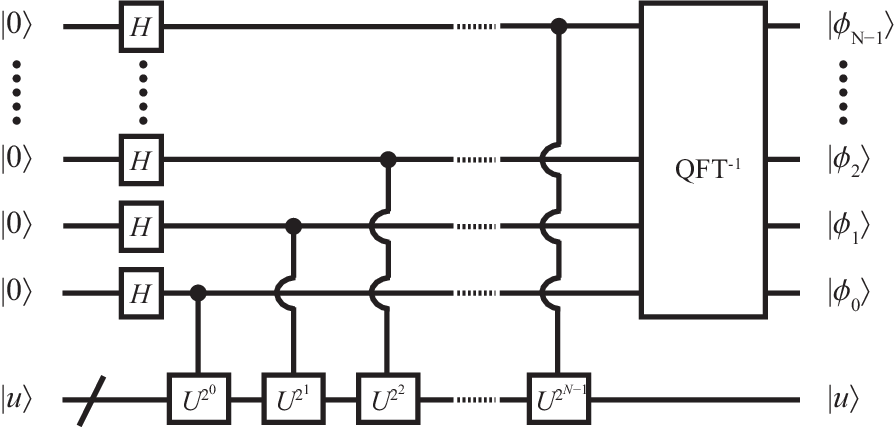

Given the eigenstate corresponding to , can be estimated efficiently as an bit binary fraction using the circuit shown in Fig. 18. Since and , where , the controlled- operations in the first part of the algorithm transform the qubits into the state . With -bit precision, , where the integer number has the -bit representation . The intermediate state can therefore be approximated by the product representation

of the Fourier-transformed -bit estimate of , whose binary digits are then recovered by an inverse Fourier transformation.

Quantum counting.—A straightforward application of the phase estimation algorithm is the determination of the number of solutions in an -item search problem, as encountered in the Grover search. In the - plane, the rotation by one Grover iteration can written as the

which is a unitary matrix with eigenvalues . We also know that lies in the - plane, and therefore is a superposition of the two corresponding eigenstates, which can be fed into the slot for .

Order finding.—Phase estimation can also be used to solve the following number-theoretic problem: given two numbers and without common divisors, what is the order of , defined as the smallest positive integer such that ? Here, the modulo operation determines the remainder of the division by . The order can be obtained by estimating the phase of the eigenvalues of the operator (for ; otherwise , which guarantees that is unitary). Conveniently, the sum of eigenfunctions

is independent of , . Therefore, initializing , the phase estimation algorithm will deliver an approximation of , where each value in the range appears with equal probability . This suffices to reconstruct the order (most efficiently, by a method based on continued fractions).

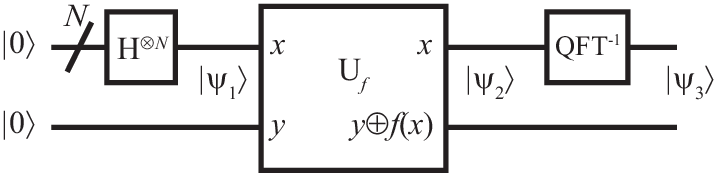

Period finding.—Note that the order is the period of the function , i.e., . It is noteworthy that a variant of the procedure above can be used to find the period of any integer-valued function. The corresponding circuit is shown in Fig. 19. The indicated intermediate states are and

where, as before, denotes the -bit estimate of , and the tilde indicates the Fourier-transformed state. Furthermore, we abbreviated

The inverse Fourier transformation converts this into the final state

so that the measurement of the output qubits in the first register delivers an -bit approximation of .

Prime factorization.—In a loose mathematical sense, the factors of an integer number can also be interpreted as ’periods’ of that number. Indeed, it turns out that the order-finding problem is equivalent to the problem of prime number factorization, and therefore can be solved efficiently using phase estimation. This is embodied in Shor’s factorization algorithm. Because the accurate description of this algorithm requires various number-theoretic concepts, we here only mention one key ingredient: assume we have randomly chosen a number and found that the order of is even (otherwise, start again with another randomly chosen ). Then is still an integer, which fulfills

Furthermore assume that (otherwise, start again…). It then follows that or shares a nontrivial factor with . The largest factor (the greatest common divisor, gcd) can be found efficiently using Euclid’s algorithm: given , iterate the identity until the smaller number is a divisor of the larger number; the smaller number is then the gcd of and , which delivers a factor of .