Gaussian Processes

5th February 2016

This was our second week in of the STOR-i Research Topic Presentations. This week, there were 6 overall topics covering quite a

wide range of Statistics and Operational Research.

Monday's topic was Medical and Bio Statistics and involved four talks. The first Variational Bayes with applications in RNA sequencing,

statistical models to help Doctors make decisions and the third and fourth talks were talking about clinical trials (though not about

using Multi-Armed Bandits as in Why Not Let Bandits in to Help in Clinical Trials?).

On Tuesday, we were given an introduction to Extreme Value theory and we touched on how it can be used in environmental situations,

as well as how covariance and dependence can be incorporated into the modelling. Wednesday was a busy day, and included two research

topics. In the morning, Statistical/Machine Learning took the lead. We had four talks looking at how privacy can be given a mathematical

definition, the theory behind the classification problem, clustering problems using projections and Gaussian processes. After an hour

off for lunch we started all over again on Logistics, Transportation and Operations. Not surprisingly, this was a set of far more

practical talks, looking at various problems from the real world. These included disaster management and evacuations and airport

capacity. The order of the day on Thursday was on Business Forecasting. We had two speakers, the first talking about how traditional

methods for continuous cases can be adapted for discrete data predictions. The second speaker spoke about forecasting when the data

can be grouped in a hierachical manner. Our final research topic, today, was on simulation. The first talk spoke about how some work

on queueing theory and how infinite server queues can be used in health care modelling, whereas the second was about simulation itself.

In the next two blog posts, I intend to decribe two of these topics in more detail. The first of these I would like to discuss is

the section of Statistical Learning on Gaussian Processes (GP). This was given by

Chris Nemeth, who captivated us all with a fancy presentation with

apparently moving graphs. (We did know that it was really just different slides.) Consider a set of Gaussian random variables

$\{X_1,...,X_n\}$ in which the covariance of $X_i$ and $X_j$ decreases as $i$ and $j$ get further apart. If one fixes $X_1$, then the

variance of $X_2|X_1$ may be quite small, but $Var(X_{10}|X_1)$ may not be affected that much. Fortunately, as each random variable is

Gaussian, conditioning any variable on another is also Gaussian, whish does make the maths much easier to deal with.

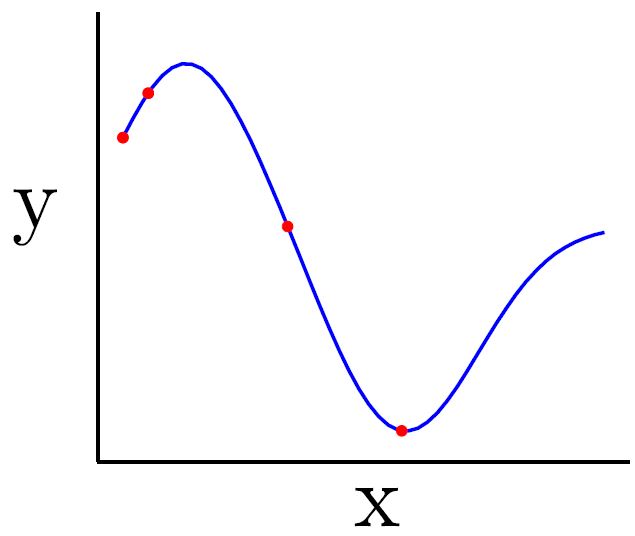

If we go further and fix more random variables, $\{X_{i_1},...,X_{i_m}\}$, then the variance of the random variables between the fixed

points is restricted. But there is a variance that can be modelled and may be very important, particularly if these random variables are

being used to give some form of non-linear regression for some points. The data points can be considered as the fixed points of a set of

random variables as described above. We can approximate the non-linear regression function for these points by using means and variances

of random variables in a set similar to that of the above description. The approximation gets better and better as we take more and more

random variables, though the error margins are still included in the model. This is the basis of Gaussian processes for use in non-linear

regression.

Figure 1

The formal definition of a GP is the following:

$$\text{A Gaussian Process is a collection of random variables, any finite number of which have (consistent) Gaussian distributions.}$$

It is a generalisation of a multivariate Gaussian distribution to infintely many variables. It can be considered as treating a function,

$f(x)$,

itself as random variable. It is fully specified by a mean function $m(x)$ and a covariance function $K(x,x')$:

$$f(x) = \mathcal{GP}(m(x),K(x,x'))$$

Fortunately, despite its infinite size, the marginalisation property means that these can be stored on a computer and thus is still

practically useful. In regression, the general model considered is $y(x)= f(x) + \epsilon\sigma_y$ where $\epsilon$

is 0,1 normally distributed. The prior given to $f$ is a GP, which means that the distribution for $y$ is also a Gaussian

process. The choice of covariance function is

$$K(x,x') = \sigma^2\exp(-(x-x')^2/(2l^2)).$$

This only depends on the distance between the two points and two hyperparameters. The choice of $l$ is very important, as it will determine how

quickly the covariance between difference points in the line tends to 0. If it is too small, $f(x)$ will over fit the data, chosen too large and

it will tend to a linear function and loose all of the non-linear information. The choice of $\sigma$ alters the how far $f(x)$ is allowed

to vary from its mean. The aim is to use these hyperparameters and the information recieved from the data to find the parameters of the

distribution of $y$.

Unfortunately,the computational cost when using $N$ test points and $M$ train point is of order $\mathcal{O}((N+M)^3). This computational

cost is a big limitation, with the largest number of variables that can be coped with is of the order 1000. Sometimes, a special

structure can help with reducing the cost. It seems like quite a clever idea, and certainly more appealing to me than the usual non-linear

regression techniques. The use of Gaussian distributions also helps everything to be much nicer and tractable to work with.

After Chris had gone over the basics, he then spoke about how GPs can be applied to different areas. One of them was

Bayesian Optimisation. If you have a set of points for which you wish to evaluate a performance function then there are a

number of ways of

going about it, some of which were discussed in Optimising with Ants and Ice-cream Cones. If these

evaluations are costly though, then the choice of which points to evaluate is difficult. One way is to consider the performance

measure as a Gaussian Process. Each evaluation will change the mean and variance in different areas, and this can then be used to

choose where the next observation should be made to optimise the information gained from each trial (see Figure 2). Although this

seems like a

heuristic (not normally my cup of tea), it may be quite interesting to look into this area. GPs also have potential use in

probabilistic

numerical, where performance may be better than Monte Carlo methods, and in classification problems.

References

[1] Figure 1 copied from slide 5 of Chris Nemeth's presentation.

[2] Figure 2 copied from slide 254 of Chris Nemeth's presentation.

Figure 2