5 Web scraping, interactive graphics, Shiny Apps, package building, and more

5.1 Packages

Install the new packages rvest and ggvis. It will also be worth installing readr and purrr, if you haven’t done so. Load in the new packages and ggplot2. Collectively, you can load in readr, purrr and ggplot2 via tidyverse:

library(tidyverse)

library(ggvis)

library(rvest)

5.2 Collecting data

So far the data we’ve used has been provided for you. More importantly, it’s been given to you in a nice format (e.g. no missing values). In practice you’ll find that most data are messy and that analysing real data requires you to spend time cleaning and manipulating the data into a useable form before you can do your analysis.

One of the best sources of data is the internet. There are now over a billion websites containing data on almost anything you can think of (e.g. income, world poverty, property values, film releases, etc.).

5.2.1 Simple scraping

In Chapter 0 we looked at a dataset containing information about planets in our solar system. In that exercise we entered the data manually. However, we can obtain these data by scraping them directly from the web.[14]

The rvest package from Hadley Wickham will allow us to read the data directly from the website. So let’s try it out. Start by loading the package if you haven’t already.

library(rvest)

Once we’ve found a good website containing the data we want, we can scrape the data from the website and store it in a data.frame object:

html <- read_html("http://nssdc.gsfc.nasa.gov/planetary/factsheet/") # download and parse the page

html_data <- html_node(html, "table") # select the first <table> element

planet_data <- html_table(html_data, header = TRUE) # convert the table to a data frame

head(planet_data)

## # A tibble: 6 × 11

## `` MERCURY VENUS EARTH MOON MARS JUPITER SATURN URANUS NEPTUNE PLUTO

## <chr> <chr> <chr> <chr> <chr> <chr> <chr> <chr> <chr> <chr> <chr>

## 1 Mass (102… 0.330 4.87 5.97 0.073 0.642 1898 568 86.8 102 0.01…

## 2 Diameter … 4879 12,1… 12,7… 3475 6792 142,984 120,5… 51,118 49,528 2376

## 3 Density (… 5429 5243 5514 3340 3934 1326 687 1270 1638 1850

## 4 Gravity (… 3.7 8.9 9.8 1.6 3.7 23.1 9.0 8.7 11.0 0.7

## 5 Escape Ve… 4.3 10.4 11.2 2.4 5.0 59.5 35.5 21.3 23.5 1.3

## 6 Rotation … 1407.6 -583… 23.9 655.7 24.6 9.9 10.7 -17.2 16.1 -153…

Essentially, we’re going to be working with four main functions:

- read_html() - download and parse the HTML of a web page from its address;

- html_node()/html_nodes() - select which parts of the page you want to scrape (renamed html_element()/html_elements() in rvest 1.0, where the old names still work);

- html_table() - convert an HTML table into a data.frame object;

- html_text() - extract the text from HTML elements.

Used correctly, these functions will allow you to scrape data from a lot of websites. However, as you can imagine, the more complex the design of the website, the harder it will be to identify the elements you’re looking for.
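To see the four functions working together without relying on a live website, here is a minimal sketch that parses a small, made-up HTML string (read_html() accepts literal HTML as well as a URL). It uses html_element(), the rvest 1.0 name for html_node(); the page content and the variable names heading and moons are invented for illustration.

```r
library(rvest)

# A tiny, made-up HTML page, parsed from a string so the example runs offline
page <- read_html('
<html><body>
  <h1>Planets</h1>
  <table>
    <tr><th>Planet</th><th>Moons</th></tr>
    <tr><td>Earth</td><td>1</td></tr>
    <tr><td>Mars</td><td>2</td></tr>
  </table>
</body></html>')

# html_element() picks the first match for a CSS selector;
# html_text() pulls out the text inside it
heading <- page %>% html_element("h1") %>% html_text()

# html_table() turns the HTML <table> into a data frame (a tibble)
moons <- page %>% html_element("table") %>% html_table()

heading
moons
```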

You may find the SelectorGadget tool (https://selectorgadget.com) particularly useful. It can be added as an extension to Google Chrome.[15] When activated, you can simply hover your mouse over the section of the website you want to scrape and SelectorGadget will tell you its CSS selector (or XPath expression).
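SelectorGadget reports CSS selectors, but rvest can also select elements with an XPath expression through the xpath argument. The sketch below runs on a made-up HTML fragment with a hypothetical class name, price, assumes rvest 1.0 or later, and checks that both routes pick out the same element.

```r
library(rvest)

# Made-up fragment standing in for part of a real page
page <- read_html('<div class="price">KSh 5,000,000</div>
                   <div class="beds">3 beds</div>')

# CSS selector, the form SelectorGadget reports
by_css <- page %>% html_elements(".price") %>% html_text()

# The same element selected with an XPath expression
by_xpath <- page %>% html_elements(xpath = "//div[@class='price']") %>% html_text()

by_css
```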

Piping is also helpful when writing web scraping code. Piping lets you direct the output of one function into another, then another, and so on, using the %>% operator (from the magrittr package, and re-exported by dplyr and rvest). As an example, here is an alternative way of implementing the code above:

planet_data <-

read_html("http://nssdc.gsfc.nasa.gov/planetary/factsheet/") %>%

html_node("table") %>%

html_table(header = TRUE)

By comparing the two implementations, you’ll notice that function(a, b) is equivalent to a %>% function(b): the piped value is plugged into the first argument of the function. If instead we want function(b, a), then we need to use the form a %>% function(b, .), where the dot marks where the piped value goes.
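A small check of these rules on a numeric vector, assuming the magrittr package (installed with the tidyverse) is available; the vector x is arbitrary.

```r
library(magrittr)

x <- c(1, 4, 9)

# f(a, b) and a %>% f(b) are the same call: x goes into the first argument
nested <- round(sqrt(x), 1)
piped  <- x %>% sqrt() %>% round(1)

# The dot placeholder sends x to a later argument, i.e. paste("value", x)
labelled <- x %>% paste("value", .)

identical(nested, piped)
labelled
```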

5.3 Alternative graphics

By now we are familiar with using the ggplot2 package instead of the base R graphics engine to produce figures. However, we are now going to cover a few extra packages.

5.3.1 Plotly

Plotly is an open-source data visualization library, available in R through the plotly package. It allows you to create interactive plots that you can use in dashboards or websites (you can save them as static images as well). The ggplotly function from the plotly package makes it easy to convert ggplot2 graphics to interactive plotly graphics. Install the package and then load it.

install.packages("plotly")

Let’s take a look at an example using the mtcars dataset, which contains data on 32 cars from 1973–74. Let’s start by plotting engine displacement against miles per gallon, coloured by the number of cylinders.

library(plotly)

# Load mtcars dataset

data(mtcars)

# Create a ggplot; factor(cyl) treats the number of cylinders as discrete groups

p <- ggplot(data = mtcars, aes(x = mpg, y = disp, color = factor(cyl))) +

geom_point(size = 3) +

labs(title = "Miles Per Gallon vs Displacement",

x = "Miles Per Gallon",

y = "Displacement",

color = "Cylinders")

# Convert ggplot to a plotly object

ggplotly(p)

In this example, we create a scatter plot using ggplot2 and convert it into an interactive plotly graphic using the ggplotly function. You can explore the resulting plot by hovering over the points, zooming in, and more.

If you run this code in an environment that can handle interactive graphics, like RStudio or a Jupyter notebook, or if you knit it to HTML (like this webpage!), you’ll be able to move around with your cursor and interact with the plot in real time. You can even embed your graphics in a responsive web environment!
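One way to embed a plotly graphic in a web page is to save it as an HTML file with saveWidget() from the htmlwidgets package, which plotly builds on. This is a sketch rather than the only approach; the file name mtcars-plotly.html and the use of a temporary folder are our own choices.

```r
library(ggplot2)
library(plotly)
library(htmlwidgets)

p_widget <- ggplot(mtcars, aes(x = mpg, y = disp, color = factor(cyl))) +
  geom_point(size = 3) +
  labs(x = "Miles Per Gallon", y = "Displacement", color = "Cylinders")

widget <- ggplotly(p_widget)

# Write the interactive plot to an HTML file in a temporary folder.
# selfcontained = FALSE keeps the JavaScript libraries in a separate
# folder next to the HTML file instead of inlining them.
out_file <- file.path(tempdir(), "mtcars-plotly.html")
saveWidget(widget, out_file, selfcontained = FALSE)
```

Opening out_file in a browser should show the same interactive plot, and the HTML (with its library folder) can be copied into a website.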

If you wish to explore or present your data, I recommend creating a ggplot plot and converting it with ggplotly().

5.3.2 ggvis

Now we’re going to look at another package, ggvis, which you’ll notice has the same graph building philosophy, albeit with a slightly different syntax. Install the package and then load it.

install.packages("ggvis")

library(ggvis)

We’re going to practise some of the basics of ggvis with the mtcars demo dataset, which comes with R in the datasets package.

# first layer: the points of a scatter plot

# second layer: predictions from a simple linear model;

# se = TRUE displays confidence bands around the predictions

mtcars %>%

ggvis(~hp, ~mpg, fill := "red", stroke := "black") %>%

layer_points() %>%

layer_model_predictions(model = "lm", se = TRUE)

## Guessing formula = mpg ~ hp

This plot can be broken down into smaller components in the same way as ggplot graphics are built up from smaller components. We plot the data points and overlay a straight line, which is the prediction from a linear model fitted to the data, together with pointwise 95% confidence bands for that prediction.
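To see what the straight line and its bands represent, we can fit the same model directly with lm() and ask predict() for pointwise 95% confidence intervals. This assumes, as the "Guessing formula = mpg ~ hp" message suggests, that layer_model_predictions() fits an ordinary least-squares model of mpg on hp; the five evaluation points in hp_grid are an arbitrary choice.

```r
# Fit the same straight line by hand
fit <- lm(mpg ~ hp, data = mtcars)
coef(fit)

# Pointwise 95% confidence intervals at a few horsepower values
hp_grid <- data.frame(hp = seq(min(mtcars$hp), max(mtcars$hp), length.out = 5))
bands <- predict(fit, newdata = hp_grid, interval = "confidence", level = 0.95)

# Columns: hp, fitted value (fit), lower (lwr) and upper (upr) band
cbind(hp_grid, bands)
```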

A car’s weight is related to the number of cylinders in its engine. So let’s group the data by number of cylinders and then, treating each group independently, fit a new linear model of mpg against weight. We are therefore fitting a piecewise linear model: a separate straight line for each cylinder group.

## piecewise linear

mtcars %>%

ggvis(~wt, ~mpg, fill = ~factor(cyl)) %>%

layer_points() %>%

# group_by(cyl) tells the next layer to fit a separate model for each cylinder group

group_by(cyl) %>%

layer_model_predictions(model = "lm", se = TRUE)

## Guessing formula = mpg ~ wt

The plot shows three separate lines, each with its corresponding 95% confidence band.
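The grouped layer corresponds, we believe, to fitting an ordinary linear model separately within each cylinder group. A base-R sketch of those three fits, using split() and lapply(), is:

```r
# One linear model of mpg on weight for each cylinder group
fits <- lapply(split(mtcars, mtcars$cyl), function(d) lm(mpg ~ wt, data = d))

# Intercept and slope for each group (columns are 4, 6 and 8 cylinders)
slopes <- sapply(fits, coef)
slopes
```

Each column of slopes holds the intercept and slope for one group, which should correspond to the three lines in the plot.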

5.3.3 Interactive/responsive graphics

You might be wondering why you should use ggvis when you can use ggplot + plotly. Good question: the two are almost on a par in terms of features. However, one of the main advantages of ggvis is that you can make more sophisticated interactive graphics than with plotly, using controls such as sliders and drop-down menus (again viewed in a browser). This can be particularly useful if you wish to manipulate the parameters of your statistical analysis and see the effect in real time.

The following code generates a basic interactive plot when it is run in the R/RStudio console. Read the warning to see why it is not interactive here.

# In this plot we have:

# a smoothing layer with input selection for span

# and a point layer with input selection for size

vis0 <- mtcars %>%

ggvis(~wt, ~mpg, fill := "purple", stroke := "black") %>%

layer_smooths(span = input_slider(0.5, 1, value = 1)) %>%

layer_points(size := input_slider(100, 1000, value = 100))

vis0

## Warning: Can't output dynamic/interactive ggvis plots in a knitr document.

## Generating a static (non-dynamic, non-interactive) version of the plot.

This particular plot has two sliders which you can play with to:

- adjust the size of the data points;

- adjust the smoothness of the line.

If you decrease the span (less smoothing), the line becomes more wobbly and passes closer to individual points, whereas if you increase the span (more smoothing), the line instead captures the general shape of the data rather than specific points.
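The effect of the span can also be seen numerically. Assuming layer_smooths() fits a loess() curve by default (our understanding of ggvis, not something shown above), a smaller span uses fewer neighbouring points for each local fit, so the curve tracks the data more closely and the residual sum of squares drops. The spans 0.5 and 1 match the ends of the slider; rss() is a hypothetical helper.

```r
# Two loess fits of mpg on weight with different spans
wiggly_fit <- loess(mpg ~ wt, data = mtcars, span = 0.5)
smooth_fit <- loess(mpg ~ wt, data = mtcars, span = 1)

# Residual sum of squares: the smaller span follows the points more closely
rss <- function(fit) sum(residuals(fit)^2)
c(span_0.5 = rss(wiggly_fit), span_1 = rss(smooth_fit))
```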

Note the message in the RStudio Console “Showing dynamic visualisation. Press Escape/Ctrl + C to stop”. You must press either of these key combinations, or the Stop icon in the RStudio Viewer, to continue submitting R commands in your R session.

When modelling a dataset we often make assumptions about how the data are generated. For example, do the residuals follow an underlying Normal distribution and, if so, can we estimate its parameters? Alternatively, the data may follow a Poisson, Gamma or Chi-squared distribution. It’s not always appropriate to assume that the data fit some pre-specified distributional family, especially if we don’t have a lot of data. Instead, we may want to model the data without making such distributional assumptions. In statistics this is known as nonparametrics, and the most common type of nonparametric density estimation is kernel density estimation. Further details can be found at https://en.wikipedia.org/wiki/Kernel_density_estimation.

Essentially kernel density estimation replaces each data point with a kernel function (e.g. Gaussian, Epanechnikov), and aims to approximate the underlying population density by smoothing your data (which is assumed to be a sample from the population).
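Written out, for data points $x_1, \dots, x_n$, a kernel $K$ that integrates to one and a bandwidth $h > 0$, the standard kernel density estimator is:

$$
\hat{f}_h(x) = \frac{1}{n h} \sum_{i=1}^{n} K\!\left(\frac{x - x_i}{h}\right),
\qquad
K(u) = \frac{1}{\sqrt{2\pi}}\, e^{-u^2/2} \quad \text{(Gaussian kernel)}
$$

Each term is a copy of the kernel centred on one observation and scaled by $h$, so a larger bandwidth spreads each copy out and gives a smoother estimate.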

Run the code below and see what happens when you change the kernel function and smoothing parameter (more commonly known as the bandwidth).

# In this plot we have an input slider to select the bandwidth of smoother

mtcars %>%

ggvis(~wt) %>%

layer_densities(

adjust = input_slider(

.1, 2, value = 1, step = .1,

label = "Bandwidth adjustment"

),

kernel = input_select(

c("Gaussian" = "gaussian",

"Epanechnikov" = "epanechnikov",

"Rectangular" = "rectangular",

"Triangular" = "triangular",

"Biweight" = "biweight",

"Cosine" = "cosine",

"Optcosine" = "optcosine"),

label = "Kernel"

)

)

## Warning: Can't output dynamic/interactive ggvis plots in a knitr document.

## Generating a static (non-dynamic, non-interactive) version of the plot.

The plot allows you to choose which kernel you are using and also to adjust the bandwidth. This is much more convenient than producing many separate plots.
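Outside ggvis, the same two controls are arguments of base R’s density(): kernel chooses the kernel shape and adjust multiplies the automatically chosen bandwidth (bw.nrd0() by default). We assume layer_densities() passes these arguments on to density(); the sketch below simply checks what adjust does.

```r
wt <- mtcars$wt

# Default: Gaussian kernel, bandwidth chosen by bw.nrd0()
d_default <- density(wt)

# adjust = 2 doubles that bandwidth; kernel swaps the kernel shape
d_wide <- density(wt, adjust = 2, kernel = "epanechnikov")

c(default_bw = d_default$bw, adjusted_bw = d_wide$bw)
```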

5.4 Shiny Web Applications

The team behind RStudio have created an application framework, Shiny, which allows you to create web pages driven by R code. For example, we can create interactive plots like those generated by ggvis, but using whichever plotting package we prefer. These apps can achieve much more, however, and can even provide a front-end to your whole statistical analysis. Within an app you can import, analyse and plot your data, so when combined with the tidyverse, ggplot2 and plotly, you can build powerful statistical and machine learning tools from scratch in a matter of hours.

To create a new app in RStudio follow this procedure:

- Click the New icon | Shiny Web App….

- Give your app a name with no spaces, such as “example-shiny-app”.

- Choose the single or multiple file approach to coding the app (it doesn’t really matter which you choose).

- Choose the directory (folder) that you want to create it in.

If you chose the single file approach, you now have a single file called app.R to edit. The placeholder code provided by RStudio will already run: click the “Run App” button in the top right corner of the Source pane and RStudio will open a new Viewer window running the app.

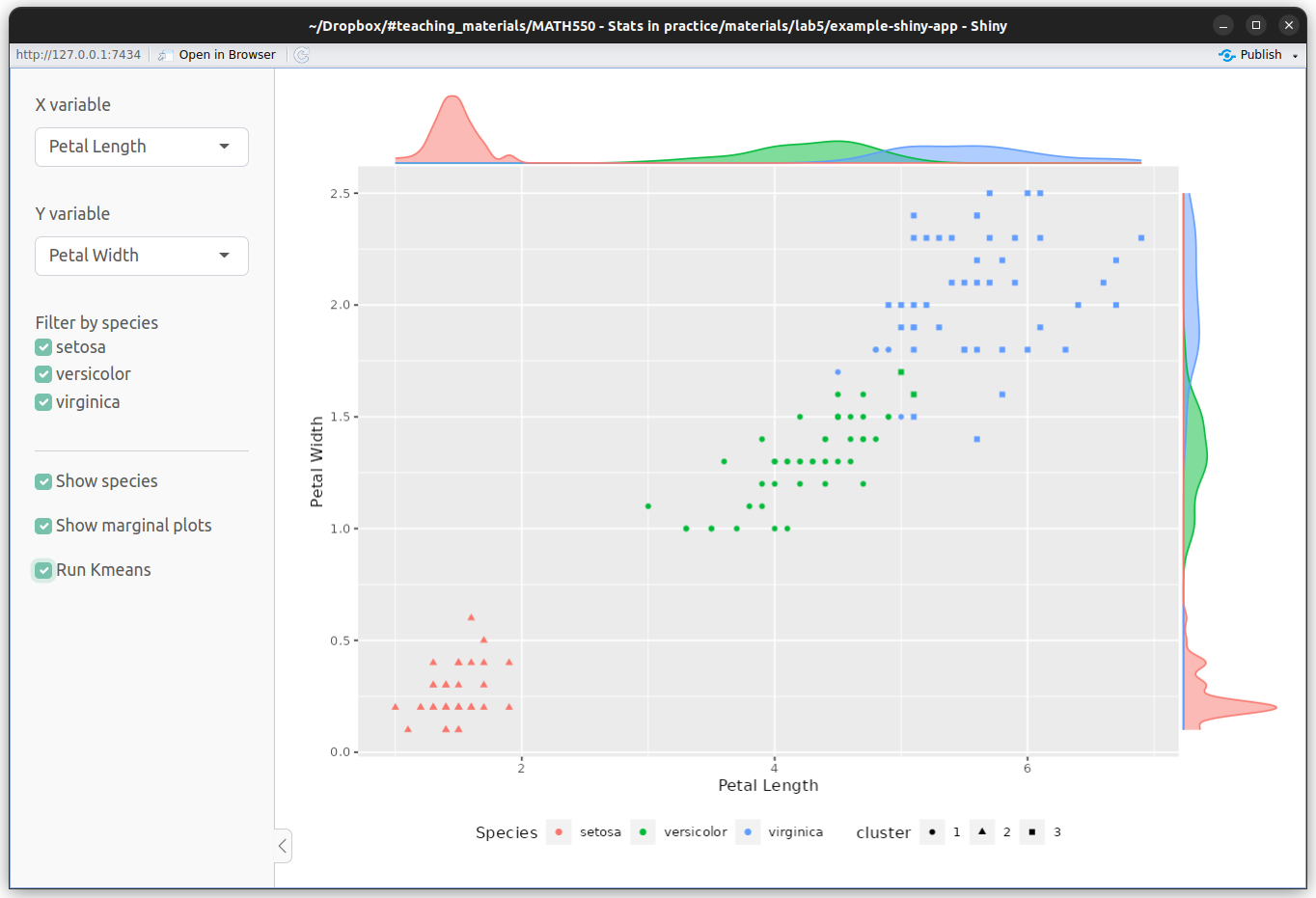

Figure 5.1: Example Shiny App by RStudio.

As with the ggvis interactive plots, you can move the slider with your mouse and R recalculates and redraws the plot. Experiment with moving the slider.

Shiny Apps are very flexible: they need not just produce plots, but can provide web front-ends to any type of R code. For example, an app could provide access to web scraping code or to a database.

Inspect the structure of the code. It is very simple. There are two main components: ui (the user interface, which defines the page layout and input controls) and server() (a function that does the computation and produces the plots). You would edit these to perform the task you need.
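A minimal single-file app.R sketch with the same two components is shown below, in the same spirit as the placeholder app (a slider that redraws a plot) but not a copy of RStudio’s template; the faithful data, the bins input and the plot labels are our own choices for illustration.

```r
library(shiny)

# ui: page layout, with a slider input and a placeholder for the plot
ui <- fluidPage(
  titlePanel("Old Faithful waiting times"),
  sidebarLayout(
    sidebarPanel(
      sliderInput("bins", "Number of bins:", min = 1, max = 50, value = 30)
    ),
    mainPanel(plotOutput("distPlot"))
  )
)

# server: re-runs renderPlot() whenever input$bins changes
server <- function(input, output) {
  output$distPlot <- renderPlot({
    x <- faithful$waiting
    breaks <- seq(min(x), max(x), length.out = input$bins + 1)
    hist(x, breaks = breaks, col = "grey", border = "white",
         xlab = "Waiting time to next eruption (mins)",
         main = "Histogram of waiting times")
  })
}

# Build the app object without launching it. In a real app.R, end the file
# with shinyApp(ui = ui, server = server) and click "Run App".
app <- shinyApp(ui = ui, server = server)
```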

Whilst your app is running, you will notice that the RStudio console reports something like “Listening on http://127.0.0.1:4122” (the port number varies). To resume your R session, you must close the window your app is running in.

5.4.1 Other RStudio features we haven’t covered

RStudio has many features we haven’t covered, including brilliant features for building your own package. A previous lecturer of this module said they would never build a package outside of RStudio any more. (Personally, I only do so when testing packages with self-built R versions.) When building a package, and for some other tasks, you need the RStudio “Build” pane. To see it, I think you must define the top-level directory of your package (or bookdown book, etc.) as an RStudio project. This creates an .Rproj file in the directory, which RStudio detects before showing the Build pane.
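If you need a package directory to open as an RStudio project, one base-R option is utils::package.skeleton(), sketched below; you could then use File | New Project | Existing Directory in RStudio to create the .Rproj file. The function square() and the package name mypkg are hypothetical, and writing to a temporary folder is only so the sketch leaves nothing behind.

```r
# A hypothetical function to package up
square <- function(x) x^2

# Create the skeleton of a package called "mypkg" in a temporary folder
pkg_path <- tempdir()
utils::package.skeleton(name = "mypkg", list = "square",
                        environment = environment(),
                        path = pkg_path, force = TRUE)

# The skeleton includes DESCRIPTION, NAMESPACE and the R/ and man/ folders
list.files(file.path(pkg_path, "mypkg"))
```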

RStudio can also run Sweave documents (which contain both LaTeX and R code) and also many types of presentation including LaTeX beamer and RStudio’s .Rpres format.

5.5 Rmarkdown HTML notebooks and Ipython/Jupyter notebooks

You may have already written Rmarkdown files to generate documents by now. An advanced type of Rmarkdown document is an “R Notebook”. These are R markdown files with the output in the YAML header defined as

output:
  html_notebook: default

When you first save an Rmarkdown file with this output definition, its output file with extension .nb.html is created immediately (even if you have not evaluated any of your code blocks).

The .nb.html file format is very clever because it actually contains the Rmarkdown code as well as the html output. Therefore, if you open an .nb.html file in RStudio it will show the associated .Rmd code even if you don’t have that separate file.

The R output shown in the .nb.html file is the output from the code blocks which have been run, i.e. if you have not evaluated all of the R code blocks in your Rmd file then not all of the output will be present in the .nb.html file.

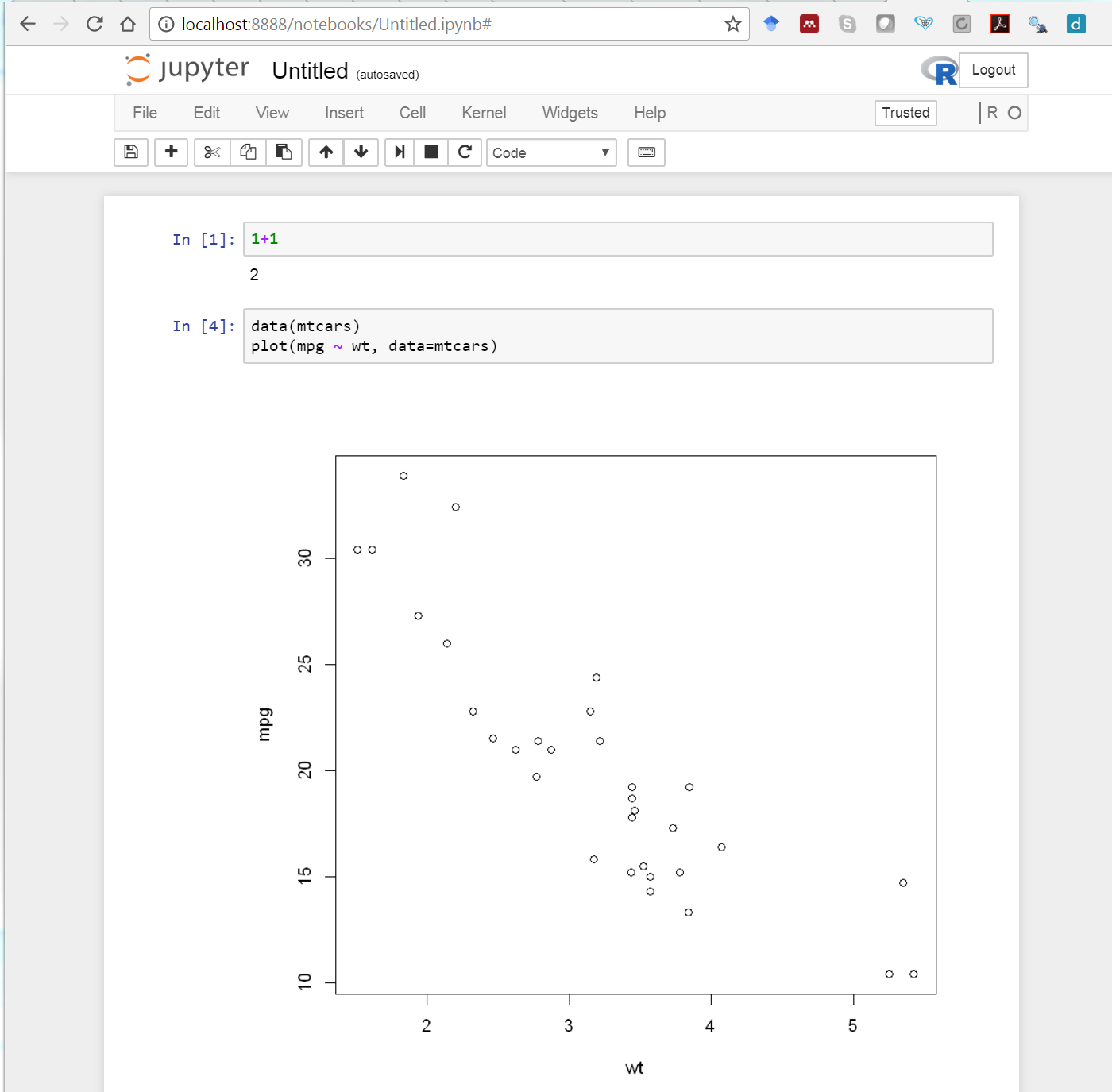

The other main notebook format used in data science is the IPython/Jupyter notebook (https://jupyter.org/). These keep the R read-eval-print loop (REPL) running in the background so you can evaluate one cell of code at a time, live in your browser. A screenshot of an example notebook is shown in Figure 5.2.

Figure 5.2: Example Jupyter notebook.

To try a Jupyter notebook without having to install it on your local machine, you can use it in a browser at https://jupyter.org/try. However, some users have not had a good experience with this website: either the R kernel dies or no space is available to launch a notebook. Running Jupyter notebooks on my own machine has been reliable.

If you want to try such notebooks, installation instructions are at https://jupyter.org/install.html. This is easiest to do on your own computer; if you are on a University Windows network computer, installation will probably fail. One step in getting Jupyter notebooks running with R is installing the R kernel for Jupyter, IRkernel (notebooks can also use kernels for many other languages, such as Python and Julia). Instructions can be found at https://irkernel.github.io/installation/.

5.6 Exercise: Housing in Nairobi

Caution: The code in this section may not work if the website changes its structure and layout.

We’ve now covered the basics of the rvest and ggvis packages. We’re now going to look at combining these packages, along with other tidyverse packages, to perform statistical analysis on a dataset we have scraped from the web.

The property market is a rich source of data which contains many interesting nuances. For example, just because a property is big, doesn’t mean it’s expensive.

Have a look at https://kenyapropertycentre.com to get an idea of its layout. Try searching for some properties. The code below extracts the address, price, number of bedrooms and bathrooms, and the date the advert was added, for each property in the search results:

listings <- read_html("https://kenyapropertycentre.com/for-sale/houses/nairobi/showtype?page=1") %>%

html_elements(".property-list")

# extract html details

address <- listings %>%

html_element(".voffset-bottom-10 strong") %>%

html_text()

price <- listings %>%

html_element(".price+.price") %>%

html_text() %>%

readr::parse_number()

beds <- listings %>%

html_element(".fa-bed+ span") %>%

html_text() %>%

readr::parse_number()

baths <- listings %>%

html_element(".fa-bath+ span") %>%

html_text() %>%

readr::parse_number()

date_added <- listings %>%

html_element(".added-on+.added-on") %>%

html_text()

housing <- data.frame(address, price, beds, baths, date_added)

You may get some warnings or errors when you run this code. Let’s ignore those for the moment. You’ll find that scraping data from websites is never trivial. Web developers design their sites to be pretty, not scraper friendly. Companies that specialise in web scraping spend a significant portion of their time updating their scrapers when websites change.

Your task is to utilise what you’ve learnt over the past few weeks to perform some statistical analyses on these data. Here are some suggestions for things to explore.

- Some values may be missing. Find a way to impute the missing values for some properties. You will need the functions whose names start with map from the purrr package (e.g. map_dbl()), which we didn’t have time to cover.

- Can we write a function to trim the code above, as almost all of the vectors are created using html_element(), html_text() and readr::parse_number()?

- As we saw with the Google Scholar data, when we scrape data from a website we only scrape what is on that particular page. This is fine, but if the data we want are spread over multiple pages then we need to be clever. Try to use a for loop to loop over each webpage and scrape the data you want. Hint: most websites follow a logical naming and numbering structure.

- Do the same thing for London, UK (or any other city of your choice). A possible website to look at is https://www.zoopla.co.uk/.

- It’s always a good idea to take a look at the data. The two most obvious functions to use here are View() and head().

- It’s also a good idea to look at some simple summary statistics, such as the mean, median and maximum. Another useful function for summarising your data is summary(). Note that summary() only reports these statistics for numeric columns (for a character column it just gives the length and class), so if any column that should be numeric contains non-numeric values, fix your data frame so that the appropriate columns are of class numeric.

- You may want to look at the price as a function of the date the advert was added.

- Create an interactive ggvis plot to explore one or more variables.
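For the second suggestion, one possible shape for such a function is sketched below. scrape_field() is a hypothetical helper, and the HTML, class names (.beds, .baths) and place names are made up so the example runs offline; on the real site you would pass in listings and the selectors from the code above.

```r
library(rvest)

# Hypothetical helper: text of the first match of `selector` inside each
# listing, optionally converted to a number with readr::parse_number()
scrape_field <- function(listings, selector, numeric = FALSE) {
  values <- listings %>% html_element(selector) %>% html_text(trim = TRUE)
  if (numeric) readr::parse_number(values) else values
}

# Made-up listings; the second one has no bathroom count
demo_listings <- read_html('
<div class="property-list"><strong>Karen</strong><span class="beds">4 beds</span><span class="baths">3 baths</span></div>
<div class="property-list"><strong>Kilimani</strong><span class="beds">2 beds</span></div>') %>%
  html_elements(".property-list")

demo_housing <- data.frame(
  address = scrape_field(demo_listings, "strong"),
  beds    = scrape_field(demo_listings, ".beds", numeric = TRUE),
  baths   = scrape_field(demo_listings, ".baths", numeric = TRUE)
)
demo_housing
```

Calling html_element() (singular) on each listing, rather than html_elements() on the whole page, keeps exactly one value per listing, so a missing field shows up as NA instead of shifting the rest of the column.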

Explore the data set. Be creative!

[14] R was not really designed for this sort of task. Other programming languages, such as Python, are generally better for web scraping.

[15] Search for this in the Chrome Web Store https://chrome.google.com/webstore/category/extensions