Style control - access keys in brackets

12.1 Motivation

TheoremExample 12.1.1 London homicides

A starting point is typically a subject-matter question.

Four homicides were observed in London on 10 July 2008.

Is this figure a cause for concern? In other words, is it abnormally high, suggesting a particular problem on that day?

We next need data to answer our question. This may be existing data, or we may need to collect it ourselves.

We look at the number of homicides occurring each day in London, from April 2004 to March 2007 (this just happens to be the range of data available, and makes for a sensible comparison). The data are given in the table below.

| No. of homicides per day | 0 | 1 | 2 | 3 | 4 | |

| Observed frequency | 713 | 299 | 66 | 16 | 1 | 0 |

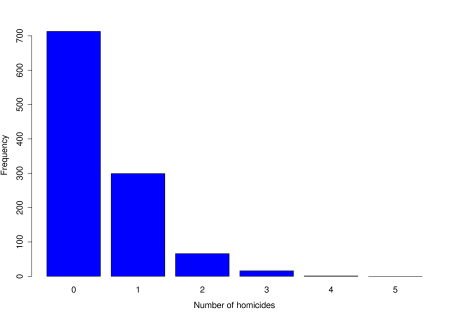

Before we go any further, the next stage is always to look at the data through exploratory analysis.

Next, we propose a model for the data to begin addressing the question.

It is proposed to model the data as iid (independent and identically distributed) draws from a Poisson distribution.

Why is this a suitable model?

What assumptions are being made?

Are these assumptions reasonable?

The probability mass function (pmf) for the poisson distribution is given by

for , so it still remains to make a sensible choice for the parameter . As before, we will make an estimate of based on our data; the equation we use to produce the estimate will be a different estimator than in Part 1.

12.2 What is likelihood?

In the last example we discussed that a model for observed data typically has unknown parameters that we need to estimate. We proposed to model the London homicide data by a Poisson distribution. How do we estimate the parameter? In Math104 you met the method of moments estimator (MOME); whilst it is often easy to compute, the estimators it yields can often be improved upon; for this reason it has mostly been superceded by the method of maximum likelihood.

Definition.

Suppose we have data , that arise from a population with pmf (or pdf) , with at least one unknown parameter . Then the likelihood function of the parameter is the probability (or density) of the observed data for given values of .

If the data are iid, the likelihood function is defined as

The product arises because we assume independence, and joint probabilities (or densities) obey a product law under independence (Math230).

TheoremExample 12.2.1 London homicides continued

For the Poisson example, recall that the probability mass function (pmf) for the Poisson distribution is given by

We will always keep things general to begin by talking in terms of data , rather than the actual data from the table. From the above definition, the likelihood function of the unknown parameter is

Let’s have a look at how the likelihood function behaves for different values of . To keep things simple, let’s take just a random sample of 20 days.

What does the likelihood plot tell us?

Definition.

Maximum likelihood estimator/estimate. For given data , the maximum likelihood estimate (MLE) of is the value of that maximises , and is denoted . The maximum likelihood estimator of based on random variables will be denoted .

So the MLE, , is the value of where the likelihood is the largest. This is intuitively sensible.

It is often computationally more convenient to take logs of the likelihood function.

Definition.

The log-likelihood function is the natural logarithm of the likelihood function and, for iid data is denoted thus:

One reason that this is a good thing to do is it means we can switch the product to a sum, which is easier to deal with algebraically.

IMPORTANT FACT: Since ‘log’ is a monotone increasing function, maximises the log-likelihood if and only if it maximises the likelihood; if we want to find an MLE we can choose which of these functions to try and maximise.

Let us return to the Poisson model. The log-likelihood function for the Poisson data is

We can now use the maximisation procedure of our choice to find the MLE. A sensible approach in this case is differentiation.

Now if we solve , this gives us potential candidate(s) for the MLE. For the above, there is only one solution:

i.e.

To confirm that this really is the MLE, we need to verify it is a maximum:

It is clear intuitively that this is a sensible estimator; let’s formalise that into two desirable features we may look for in a ‘good’ estimator. We recall these properties (which you have met before):

Now plugging in the value of we find that

Plugging in the actual numerical values of the data at the last minute is good practice, as it means we have solved a more general problem, and helps us to remember about sampling variation.

The next step is to check that the Poisson model gives a reasonable fit to the data.

Plotting the observed data against the expected data under the assumed model is a useful technique here.

The fit looks very good!

Let us now compare this to another estimator you already know: the method of moments estimator from Math104.

Definition.

Let be an iid sample from pmf or pdf , where is one-dimensional. Then the method of moments estimator (MOME) solves

The left hand side is the theoretical moment and the right hand side is the sample moment. What this means is that we should choose the value of such that the sample mean is equal to the theoretical mean.

In our example, recall that for a Poisson distributed random variable, . Therefore the MOME for is

Notice that the MLE coincides with the MOME. We should be glad it does in this case, because the estimator is clearly sensible!

Whenever the MOME and MLE disagree, the MLE is to be preferred. Much of the rest of this part of the course sets out why that is the case.

Finally, we need to provide an answer to the original question.

Four homicides were observed in London on 10 July 2008. Is this figure a cause for concern?

The probability, on a given day, of seeing four or more homicides under the assumed model, where , is given by

by Math230 or R.

But what must be borne in mind is that we can say this about any day of the year.

Assuming days are independent (which we did anyway when fitting the Poisson distribution earlier), if is the number of times we see 4 or more homicides in a year, then , and

So there is a chance of approximately that we see 4 or more homicides on at least one day in a given year.

Some R code for the above: