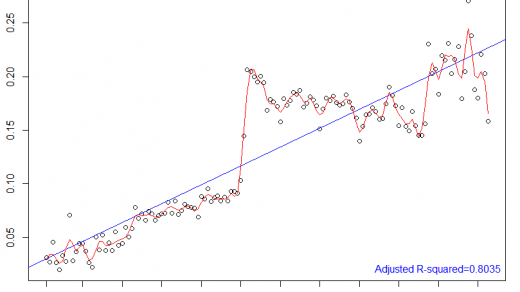

Say you’re a robotics researcher and you want to find the best path for a robot to take in order to perform some tasks. Unless your course is very simple, you’ll probably have to fiddle with some parameters in order to achieve this with confidence, and so you’ve decided to use Bayesian optimisation to cut down on time. Every evaluation still means running your robot on the full course, though, so if you need good results very quickly this still won’t cut it.

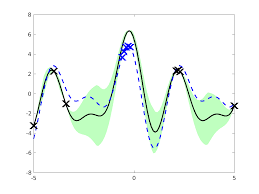

However, if you can perform a fast simulation of your robot’s behaviour that is reasonably close to the truth, you might consider incorporating it into your procedure to speed things up. Enter the domain of multi-fidelity optimisation, where rough-and-ready approximations of the objective function are used to cut down on evaluation time. As long as you can quantify how much information you get from these low-fidelity sources (a non-trivial task!), you can add them to your routine.

STOR-i student Henry Moss’ MUMBO method, presented at the 2020 STOR-i Conference and the inspiration for this post, casts a state-of-the-art Bayesian optimisation method in this framework, with convincing empirical results. Applied to our toy example, it would weigh the cost of each run against the information it provides, whether that run is of our simulation or of our robot on the course, optimising almost as well as the unmodified method but slashing run time.
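
To make the cost-versus-information trade-off a bit more concrete, here is a minimal sketch of the general idea, not MUMBO itself. It assumes we already have an estimate of how much information each candidate run would give us (the hard part, skipped here) and simply picks the run with the most information per unit cost. The `Candidate` class, the `pick_next` function and all the numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    source: str       # "simulation" or "robot" (hypothetical labels)
    setting: float    # hypothetical parameter value to try next
    info_gain: float  # assumed estimate of the information gained
    cost: float       # assumed cost of the run, e.g. in minutes


def pick_next(candidates):
    """Return the candidate with the highest information gain per unit cost."""
    return max(candidates, key=lambda c: c.info_gain / c.cost)


# Illustrative numbers only: the real robot is informative but slow,
# the simulation is less informative but far cheaper.
candidates = [
    Candidate("robot", 0.3, info_gain=1.0, cost=60.0),
    Candidate("simulation", 0.3, info_gain=0.2, cost=1.0),
    Candidate("simulation", 0.7, info_gain=0.05, cost=1.0),
]

best = pick_next(candidates)
print(best.source, best.setting)
```

With these made-up numbers the cheap simulation wins, because a fifth of the information at a sixtieth of the cost is a good deal. As the simulation runs stop telling us anything new, their estimated information gain would shrink and the expensive robot run would eventually become the better choice.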