This is a bit of a different one, but I think it’s a decent showcase of Occam’s razor. I’ll be looking at how the enemy AI is designed in the video game F.E.A.R.

F.E.A.R., Monolith Productions’ 2005 first-person shooter game, was critically acclaimed for having some of the best non-player character (NPC) AI at the time. The enemy soldiers can dodge, fire from cover and even work together to ambush the player. This makes for an all-around challenging experience, especially given the survival horror theme of the game. I mean, just check out the showcase below!

Given that the AI was partly engineered by an MIT graduate student, this comes as less of a surprise — but what makes it really tick?

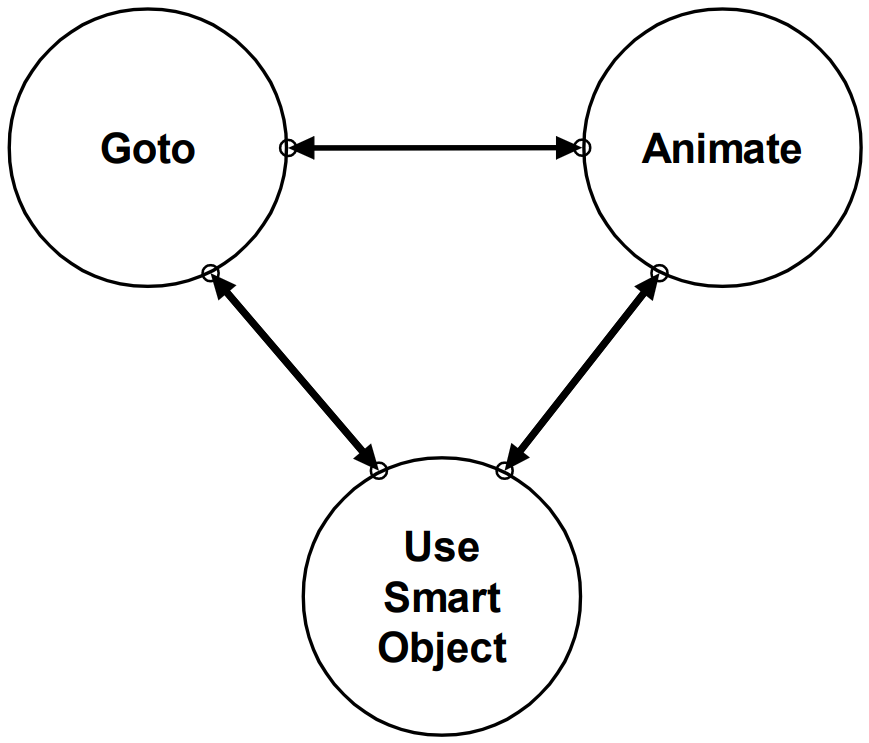

The recipe is deceptively simple. As Jeff Orkin puts it in his Games Developer Conference paper [1], it’s “Three states and a plan.” Since all an NPC does is play animations or move across the map, at any given time it decides on what action to perform based on being in of the three states below.

When the NPC is in the “Goto” state, it moves to a specific location. The “Animate” state specifies an animation from a static position, such as firing a weapon, and the “Use Smart Object” state implies an animation involving a prop (such as flipping a table) is performed.

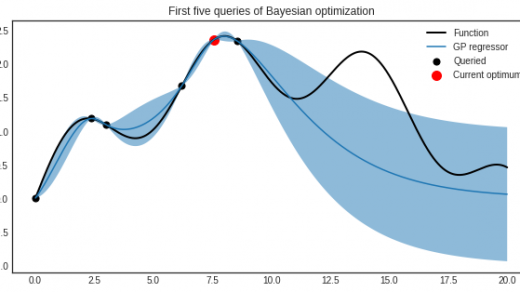

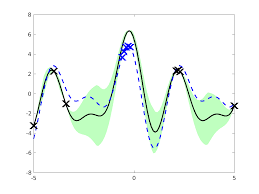

F.E.A.R. uses a planning system to make NPCs swap between these states. Every character gets its own set of goals (such as “Patrol”, “Kill Enemy” or “Ambush”) and its own set of actions (think “Reload”, “Suppression Fire”) to achieve those goals. At any given time, the state of the world (a different kind of state from the three ones above) is modelled as a vector, with the NPC starting in one state and progressing towards the state corresponding to its goal.

Here’s where the maths comes in. The possible states of the world are baked into a graph, with adjacent states being linked by weighted edges representing the cost of the action undertaken to perform the transition. The problem of NPC decision-making now becomes one of graph traversal, so each NPC just has to navigate the weighted graph in a sensible way — Monolith opted for a particularly efficient implementation of the A* shortest path algorithm. A* search is also used more conventionally for map navigation when a “Goto” state is reached.

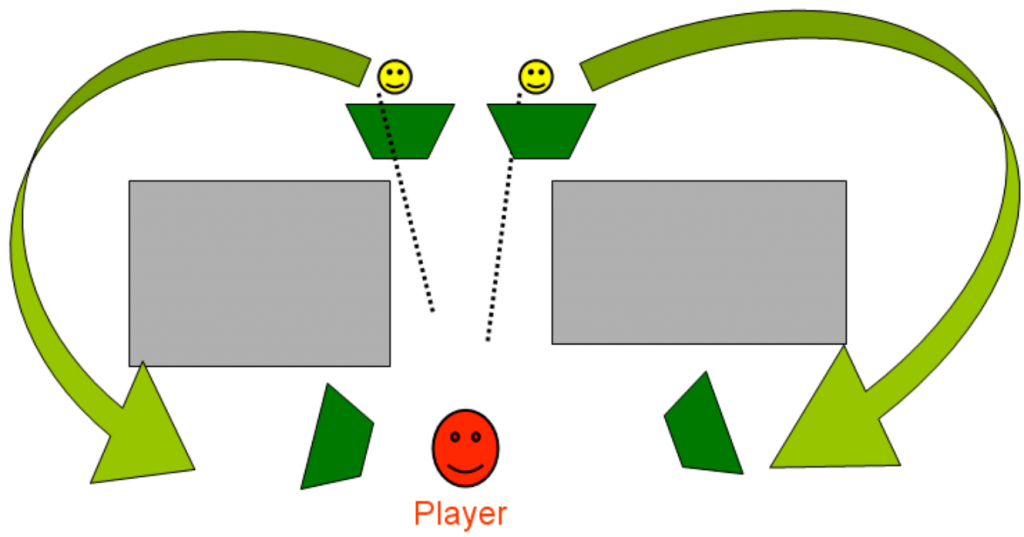

The final piece of the puzzle is a squad manager, which assigns goals according to a pre-specified set of behaviours. It groups NPCs by proximity, creating the illusion of cooperation even though individual NPCs are blissfully unaware of their squad-mates. Complex behaviours can arise, like pincer movements as a consequence of a command to move to cover closer to the player.

And that’s it — no fancy machine learning methods needed. I think the main takeaway here is that solutions needn’t be complicated to be effective. For what is essentially repeatedly solving a shortest path problem, the end result is game AI that can really put the player in a pinch and tie the whole experience together.

Further reading & viewing

[1] Orkin, J. (2006), Three States and a Plan: The AI of F.E.A.R. Proceedings of the Game Developer’s Conference (GDC)

[2] Thompson, T. (2014), Facing your F.E.A.R. Lecture on videogame AI, University of Derby