Imagine that you have a weighted sample of size N and you want to obtain another sample of the same size, consisting of the same elements. One way to do that is by doing a miltinomial sampling, where we sample each point (or particle if we deal with particle filters) proportional to its weight. That way, we will remove particles within the sample that have small weights and resample more the points with higher weights. This, however, reduces the diversity of the sample. What is more, even if all points within the sample have the same weight 1/N, there is a high probability that some of them will not be resampled at all. That why we devote this blog to sampling techniques.

Stratified/Systematic Resampling

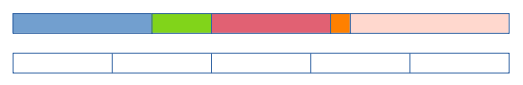

Imagine that we are trying to find a better resampling technique for the particle filters discussed in the previous blogs. Then at each time observation, we will have N particles and their corresponding weights. Stratified resampling essentially divides the population of particles into sections (subpopulations) called strata, [1]. To help us visualise the idea, imagine the normalised weights as sections, such that each section has the length of the corresponding weight, see the figure below. For example, the first particle will have a weight equal to length of the first blue rectangle.

Now, since the sections are equal to the normalised weights, their total length should add up to 1. In the figure, we have considered 5 particles, but you can imagine a lot more, we have just plotted the strata for the first 5. The bottom rectangle represents the interval [0,1], but split into equal sections of length 1/N, where N is the number of particles. Then, the stratified resampling will sample a uniform random point in each of the small rectangles on the bottom and see which particle it corresponds to. Naturally, this is eliminate the particles with very small weights, but will even out to some extent their sampling. For example, if all the particles had the same weight, then in the multinomial resampling there is a high probability that some of them will not be resampled, which is not the case for the Stratified resampling, ensuring the diversity of the particles is not deteriorating. Mathematically, Stratified resampling partitions the [0,1] interval into N disjoint subintervals: [0, 1/N) \cup [1/N, 2/N) \cup \ldots \cup [1-1/N, 1]. Then, sample from N random variables, \{u_{j}^{i}\}_{i=1}^{N} independently in each interval at time j, i.e.:

u_{j}^{i} \sim U\Big[\frac{i-1}{N}, \frac{i}{N}\Big), \quad \text{for} \; i \in {1,\ldots,N}.

Then we can obtain the resampled particles following the argument above. On the other hand, systematic resampling, see [2], exploits the strata in a different way, where the only the first random variable is drawn from a uniform distribution, while the others are determined deterministically by the following procedure:

u_{j}^{1} \sim U\Big[0, \frac{1}{N}\Big),

u_{j}^{i} = u_{j}^{1} + \frac{i - 1}{N}, \quad \text{for} \; i \in {1,\ldots,N}

Now, both stratified and systematic resampling turn out to be of \mathcal{O}(N), but the systematic resampling is more computationally efficient as it requires smaller number of random variables to be generated.

Residual Resampling

Residual resampling consists of two stages, see [3], [4] and [5]. The first stage of the algorithm is to determine which particles have a weight higher than 1/N. Then, it will sample each such particle N_{j}^{i} times, where N_{j}^{i} = floor(Nw_{j}^{i}). The total number of the replicated particles after the first stage will be N_{j} = \sum_{i=1}^{N}N_{j}^{i}. The second stage consists of replicating R_{j} = N - N_{j} number of particles, using a multinomial sampling, but from new (residual) weights, given by (unnormalised):

\hat{w}_{j}^{i} = w_{j}^{i} - \frac{N_{j}^{i}}{N}.

Intuitively, if a particle has been resampled at the first stage, then its residual weight will be reduced, leading to a lower probability of it being resampled again. If the particle has not been resampled in the first stage, then its residual weight the maximum one for this particular particle (in other words the original), hence it has a higher change of being resampled at the second stage. This ensures particle diversity while at the same time removing the particles with negligible weights. Due to the two loop structure, the time complexity of the residual resampling is \mathcal{O}(N) + \mathcal{O}(R_{j}). However, one can remove the computatinally expensive multinomial resampling by conbining the two loops into one. The method is called residual systematic resampling (RSR). In essence, RSR is very similar to systematic resampling. The difference lies in that systematic resampling the random variables {u_{j}^{i}}_{i = 1}^{N} are determined once we know u_{j}^{1}, while in RSR, u_{j}^{i} is updated with reference to the currently considered weight, and for this reason the value of the weight of the previous particle needs to be subtracted from u_{j}^{i}.

Varying sample size

Up to know we have considered different resampling methods where at each time observation we have a choice which results in either propagating the same paths (if the ESS is above some threshold) or resampling the same number of paths that we started with. There could be instances, however, where it could be useful sample more or less particles. Imagine for example, that from one time observation to the next, your ESS deteriorates drastically . This means that we are not exploring the space so much and resampling more particles could be desirable. On the contrary, we also saw that sampling too many particles might only lead to incremental improvements, while linearly increasing the computational time, hence it might be desirable sometimes to sample less particles. One approach, called branch-kill or branching, replicates the i^{th} particle at time j, N_{j}^{i} = floor(Nw_{j}^{i}) + 1 times with probability p, or N_{j}^{i} = floor(Nw_{j}^{i}) with probability 1-p, where p = Nw_{j}^{i} - floor(Nw_{j}^{i}).