Last time we introduced that the limit to the traditional Q-Learning and Sarsa methods lies in the need to store each Q-value for every state-action pair in some giant array, and while this is fine for millions or tens-of-millions of states, there are \(10^{170} \) unique states in the ancient boardgame Go.

For the extremely large scale problems we are limited in applying traditional RL methods, not only by the need to hold in memory the estimated learned values (typically in some kind of giant table or array), but the time required to discover and have our agent complete the array. In such large problems our agent will regularly encounter new states, and so there is need of some general function approximation to represent the necessary value or policy functions. In addition, although not further discussed here, a continuous observation/action space, or an infinite MDP causes significant computational limitations under traditional methods, and so introducing some approximate method becomes necessary in these scenarios aswell.

Common methods include Stochastic Gradient Decent and Linear Function approximation, but I’d like to discuss the application of Artificial Neural Networks to RL. For general approximation methods, Sutton & Barto (2018) has the better part of 200 pages dedicated to this topic.

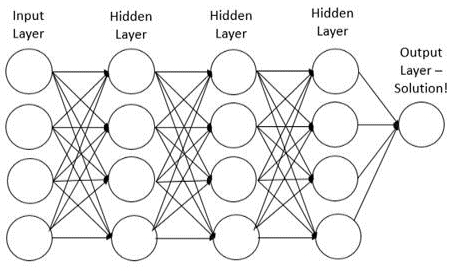

An Artificial Neural Network (ANN) is fundamentally a model designed to imitate how the human brain works. It is a collection of Nodes that each make up different layers where nodes are connected between the layers (see below). Each node contains an activation function which determines what the node outputs. Typically this is a sigmoid function that outputs a binary signal, which is registered by the next layer of nodes deeper into the structure who each in turn perform the same process. Each layer has the objective of learning about some feature of the system and has the goal to minimise some error term. RL looks to use ANNs this way to estimate value functions, maximize expected reward or estimate some policy-gradient algorithm.

Deep Learning (a Neural Network comprised of many layers or DNN) is most commonly associated with image recognition, classification or advanced text generation (See everything the amazing OpenAI team is working on). The general process is the same for DNNs, that, for example, when an image is shown to a neural network the image is split up into different inputs, processed by the network and some output is determined. Any error in output is pushed back through the network impacting the weightings of different nodes/neurons that make up the network. This is the process of Back-propagation and is commonly how both Artificial Neural Networks and Deep Learning systems learn. As Deep Learning tends to learn very slowly, it can be difficult to apply practically. However, in RL our agent can experience thousands of episodes and therefore obtain thousands of training observations very quickly, making Deep Learning specifically very applicable to approximating various learning equations required for RL. This process is called Deep Reinforcement Learning (DRL).

On the 9th April 2015, Google DeepMind Patented an algorithm \\ (https://patents.google.com/patent/US20150100530A1/en) called the Deep Q-Network (DQN). DQN uses a Deep Neural Network to map a high dimensional state-action space into Q-Value estimates, which traditional Q-learning can apply. DQN uses Experience Replay, Target Network and Reward Clipping methods to handle the high variance in learning caused by a typically high correlation in inputs that the agent receives. They achieve this randomising by the order of a set of recent observations to remove the high correlation of observations, and only periodically update the estimates of the state-action values between the Neural Network and the Q-Learning Algorithm. The state-value update step for DQN follows

\begin{equation}\label{DQN}

Q(s_t,a_t) \xleftarrow[]{} Q(s_t,a_t) + \alpha[r_{t+1} + \gamma max_{a} Q(s_{t+1},a | \theta) – Q(s_t,a_t | \theta)],

\end{equation}

where \( \theta \) is the state-action estimated values provided by the Neural Network, hence\( Q(s_t,a_t | \theta) \) is the predicted Q-Value and \( Q(s_{t+1},a | \theta)\) is the target/objective Q-Value of the Neural Network. Lanham (2020) provides a detailed working example in python of DQNs using PyTorch.

Because Deep Reinforcement Learning is designed to be applied to the most complex of scenarios and is capable of handing situations where the agent lacks complete information in an online scenario, some of the most high profile examples of Reinforcement Learning are found to be applying methods from Deep Reinforcement Learning.

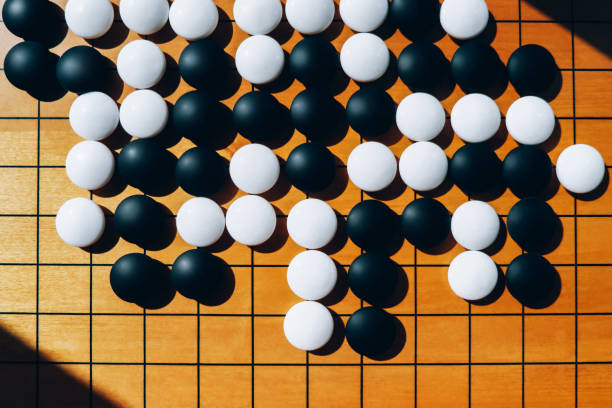

During the last 30 years, humans have been surpassed by Artificial Intelligence in many board games, such as Backgammon and Chess, but the game of Go proved difficult to produce an AI algorithm that could challenge the highest rated players in the world. In 2016, AlphaGo, developed by DeepMind, successfully defeated the at time current 18-time world Go champion Lee Sedol in Korea 4 wins to 1 loss. AlphaGo was designed with a combination of Deep Reinforcement Learning, Supervised learning and Monte Carlo tree search which relied on a huge database of professional human moves. In 2017 DeepMind released AlphaGo Zero as a successor algorithm that relied solely on Deep Reinforcement Learning, learning from playing games with just itself exclusively. The neural network uses an image of the raw board state and the history of states, outputting a vector of probabilities for the agent taking each action next, and a vector of values which estimated the probability of the agent winning given their current state.

The occasion was a significant event, with many analysts predicting that Artificial Intelligence research was more than 10 years away from conquring the game of Go.

Thank-you for joining me through the steps of Reinforcement Learning, and I hope you found some of the power of RL enlightening. There might be one more blog to come from me on Reinforcement Learning discussing the current Endgame of RL research, although that will be much shorter a post if I decide to discuss it. Thank-you for your time and all the best,

– Jordan J Hood