Kelsey Atemie-Hart

Sign language is the method of communication used by people with hearing and speech disabilities. The sign language used in one country differs from that used in another. People without these disabilities often find it difficult to communicate with deaf and speech-impaired people, which creates a communication gap. Technologies have been developed to aid the learning of sign language by applying deep learning techniques to build Sign Language Recognition (SLR) systems. An SLR system uses machine learning to convert sign gestures performed by individuals into readable text. Two approaches are used in deep learning: the sensor-based approach and the vision-based approach. They differ in computation and structure. This research paper analyzes the two approaches, how they are implemented, and their limitations, and from that analysis determines the best approach to adopt when developing an SLR system. The best approach is determined by its recognition accuracy, its ease of use for end users, and the computational complexity of building it.

Keywords: communication, sign language recognition, analyze, accuracy.

SIGN LANGUAGE RECOGNITION APPROACHES IN DEEP LEARNING

INTRODUCTION

- Sign language is a form of communication for people with hearing and speech disabilities.

- Technologies have been developed to aid this method of communication.

- Deep learning is a very useful approach for developing Sign Language Recognition (SLR) systems.

- Two approaches are currently used to develop an SLR system in deep learning: the sensor-based approach and the vision-based approach.

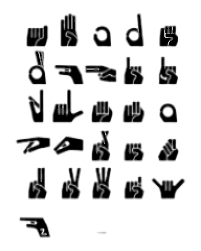

American Sign Language

MOTIVATION

- The purpose of this research is to identify the machine learning approaches used to develop an SLR system, identify the weaknesses of these approaches, and provide a solution.

- To provide a solution that improves accuracy and efficiency.

- To improve SLR systems for people with hearing and speech disabilities, making communication with other individuals easier.

PROBLEM

- The problem is bridging the communication gap between hearing- and speech-impaired people and other individuals. Applying machine learning to SLR should improve communication between individuals effectively and accurately, and at a lower cost than employing a translator.

DEEP LEARNING APPROACHES FOR SLR SYSTEMS

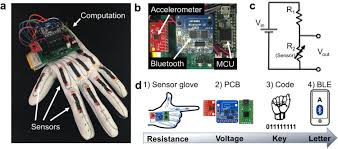

- SENSOR-BASED APPROACH

- This approach captures hand measurements, such as the hand positions and the orientation of the joints. It makes use of sensor gloves, digital cameras, depth cameras, accelerometers, the Leap Motion controller, etc.

Smart Glove

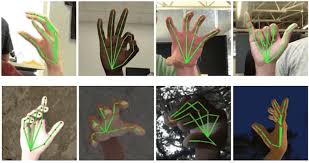

- VISION-BASED APPROACH

- The vision-based approach uses cameras to capture the hand gestures made by the individual, without gloves or any other external tool.

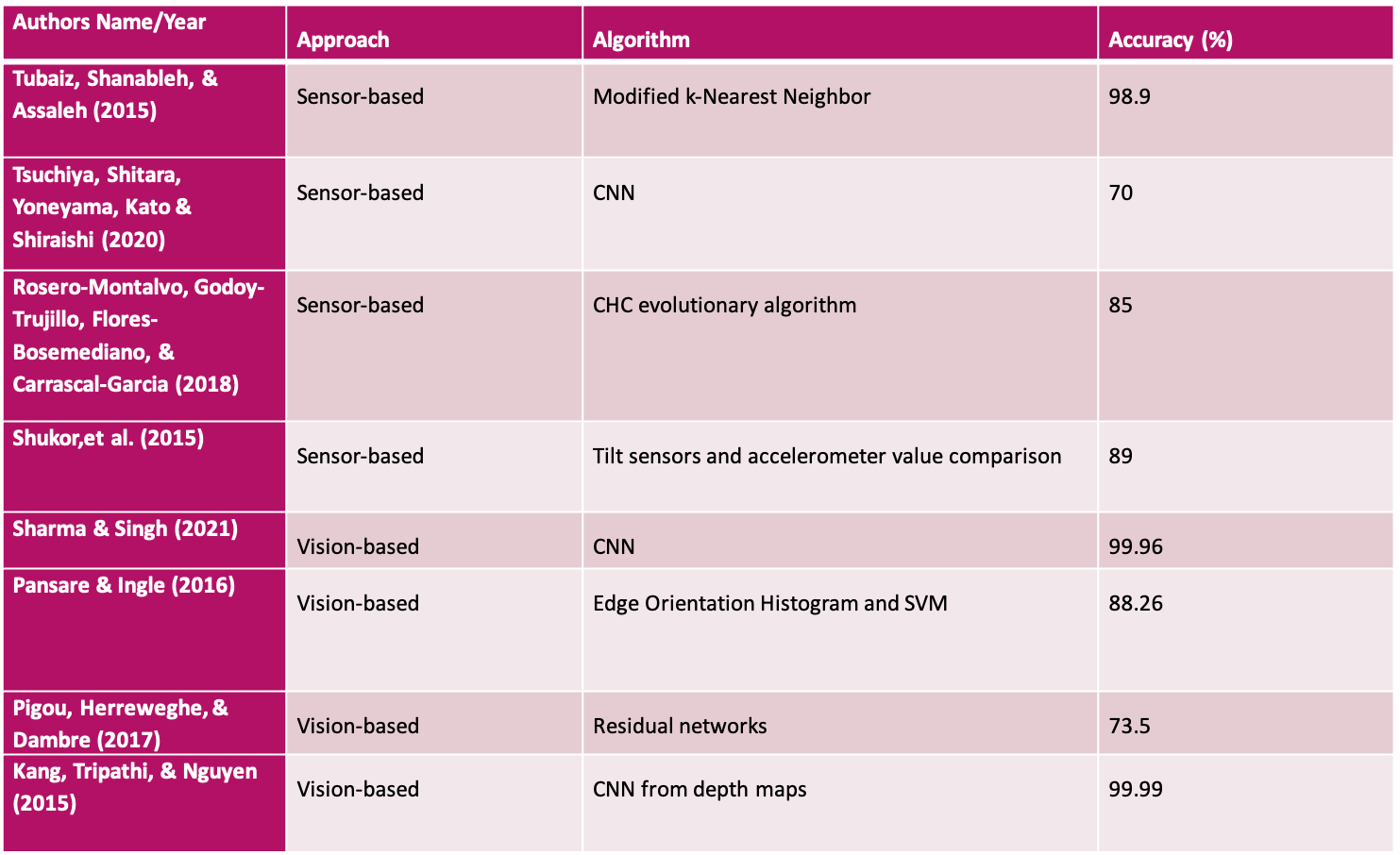

COMPARISON OF BOTH APPROACHES

- Vision-based approach:

- It uses only a camera to capture gestures, which makes it more convenient.

- It has little to no building cost.

- It is more portable for end users.

- It has a higher computational cost because of the processing needed to extract the gesture from the image.

- According to the table below, it achieves higher accuracy in the works surveyed.

- Sensor-based approach:

- It is less convenient to use because the user has to wear sensor gloves.

- It has a lower computational cost because the sensors in the gloves report the relevant data directly.

- It has a higher building cost because of the gloves, accelerometers, microcontrollers, flex sensors, etc.

- It is less portable.

ACCURACY COMPARISON OF BOTH APPROACHES

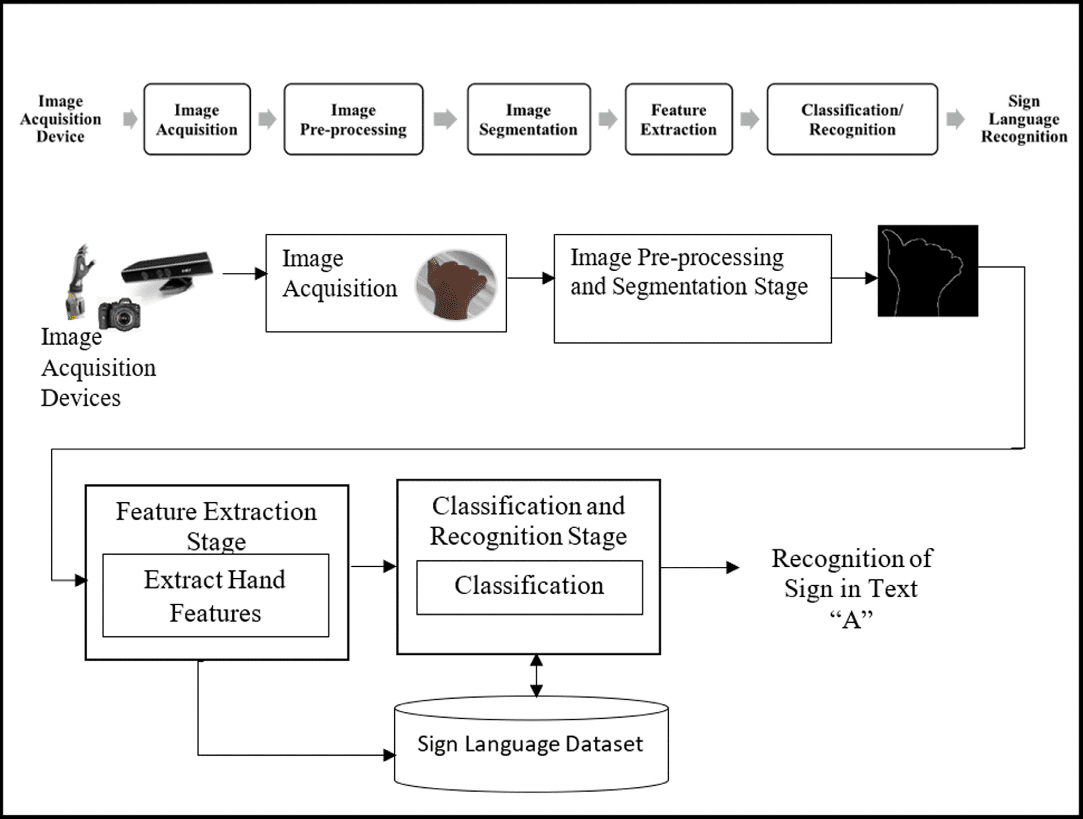

VISION BASED APPROACH METHOD

BEST APPROACH TO ADOPT

In the vision-based approach, the captured gestures depict the fingers, palms, and face in terms of posture, location, and motion.

Image processing is needed to separate the signer's hands from the background. However, image noise from a variety of sources (camera, lighting, color matching, and background) affects the extraction of the user's facial and body movements and limits dynamic image recognition. Noise filters are used to mitigate this.

This approach also makes use of classification methods that increase its efficiency, such as Hidden Markov Models (HMMs) and neural networks.

In addition, this system can easily be used by individuals anywhere and at any time; it is more scalable, portable, and efficient.

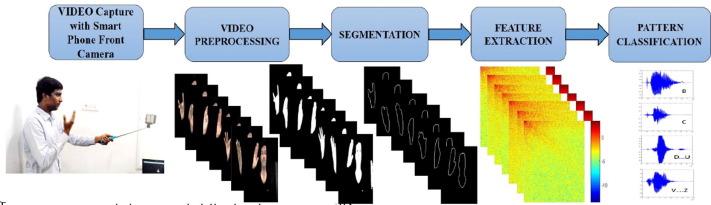

IMAGE ACQUISITION

This is the first step in recognition. The built-in web camera of a desktop or laptop is used to capture the sign gestures made by the user. The camera should be high definition so that it captures good-quality images of the hand gestures and increases the accuracy of interpretation.

IMAGE PREPROCESSING/SEGMENTATION

This step involves cropping, filtering, and brightness and contrast adjustment of the captured image. Image segmentation is used alongside image cropping and enhancement. The captured images are in RGB form and are converted to binary images. The image is cropped to remove the unwanted parts, and the selected area then undergoes image enhancement. Edge detection is another segmentation method: it detects the boundary of the cropped areas of the image, which is later used for feature extraction. With OpenCV, the images are resized to the same dimensions to eliminate size differences between images of different gestures.
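The preprocessing steps described above (RGB to binary conversion, cropping, and resizing to a common size) can be sketched as follows. This is a minimal illustration, not the pipeline used in this work: it uses plain Python lists so that it runs without dependencies, whereas a real system would more likely use OpenCV calls such as `cv2.cvtColor`, `cv2.threshold`, and `cv2.resize`. The function names, the threshold of 128, and the assumption that the hand is brighter than the background are all hypothetical choices made for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]      # (R, G, B), each channel in 0-255
RGBImage = List[List[Pixel]]      # rows of pixels
BinaryImage = List[List[int]]     # 1 = foreground (hand), 0 = background


def to_binary(image: RGBImage, threshold: int = 128) -> BinaryImage:
    """Convert RGB to grayscale (BT.601 luma weights), then threshold.

    Assumption: the hand is brighter than the background.
    """
    return [
        [1 if 0.299 * r + 0.587 * g + 0.114 * b >= threshold else 0
         for (r, g, b) in row]
        for row in image
    ]


def crop_to_foreground(binary: BinaryImage) -> BinaryImage:
    """Remove the unwanted border by cropping to the foreground bounding box."""
    rows = [y for y, row in enumerate(binary) if any(row)]
    if not rows:
        return binary  # nothing detected, leave the image unchanged
    cols = [x for x in range(len(binary[0])) if any(row[x] for row in binary)]
    return [row[cols[0]:cols[-1] + 1] for row in binary[rows[0]:rows[-1] + 1]]


def resize_nearest(binary: BinaryImage, width: int, height: int) -> BinaryImage:
    """Resize with nearest-neighbour sampling so all images share one size."""
    src_h, src_w = len(binary), len(binary[0])
    return [
        [binary[y * src_h // height][x * src_w // width] for x in range(width)]
        for y in range(height)
    ]


def preprocess(image: RGBImage, width: int = 32, height: int = 32,
               threshold: int = 128) -> BinaryImage:
    """Run the three steps in order: binarize, crop, resize."""
    cropped = crop_to_foreground(to_binary(image, threshold))
    return resize_nearest(cropped, width, height)
```

Calling `preprocess(frame)` on a captured frame would return a 32 by 32 binary image, so every gesture reaches the feature extraction stage at the same size regardless of where the hand appeared in the frame.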

FEATURE EXTRACTION

This step is useful for building a database for sign recognition. To characterize the similarity between letter images, both local and global visual features are extracted so that the varied visual properties of the letters of the alphabet can be characterized quickly and effectively. There are two methods of feature extraction: contour-based shape representation and description, and region-based shape representation and description.
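The difference between the two families of shape descriptors can be illustrated with a small sketch. Region-based features use every pixel inside the shape (here, area and centroid), while contour-based features use only the boundary pixels (here, a simple perimeter count). The specific features and function names are assumptions chosen for illustration; the works surveyed use richer descriptors.

```python
from typing import Dict, List, Tuple

BinaryImage = List[List[int]]  # 1 = foreground (hand), 0 = background


def region_features(binary: BinaryImage) -> Dict[str, float]:
    """Region-based description: computed from all foreground pixels."""
    points = [(x, y) for y, row in enumerate(binary)
              for x, value in enumerate(row) if value]
    area = len(points)
    if area == 0:
        return {"area": 0.0, "centroid_x": 0.0, "centroid_y": 0.0}
    return {
        "area": float(area),
        "centroid_x": sum(x for x, _ in points) / area,
        "centroid_y": sum(y for _, y in points) / area,
    }


def contour_pixels(binary: BinaryImage) -> List[Tuple[int, int]]:
    """Contour-based description: foreground pixels that touch the background
    (or the image border) through one of their four direct neighbours."""
    height, width = len(binary), len(binary[0])

    def is_background(x: int, y: int) -> bool:
        if not (0 <= x < width and 0 <= y < height):
            return True  # outside the image counts as background
        return binary[y][x] == 0

    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return [
        (x, y)
        for y in range(height)
        for x in range(width)
        if binary[y][x]
        and any(is_background(x + dx, y + dy) for dx, dy in neighbours)
    ]


def shape_features(binary: BinaryImage) -> Dict[str, float]:
    """Combine both kinds of descriptor into one feature dictionary."""
    features = region_features(binary)
    features["perimeter"] = float(len(contour_pixels(binary)))
    return features
```

For a filled 3 by 3 square, the region-based area is 9 while the contour-based perimeter is 8, because only the centre pixel has no background neighbour.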

CLASSIFICATION

Image classification is a supervised learning problem in which a model is trained to recognize a collection of target classes (things to identify in images) using labeled sample photos. Raw pixel data was used as the input to early computer vision models.
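The supervised setup described above (labeled samples in, a trained model out, then prediction on new input) can be sketched with a deliberately simple classifier. The example below uses a nearest-centroid classifier on flattened raw pixel vectors as a stand-in; it is not a neural network or an HMM, and the class name and toy data are hypothetical. It only shows the fit and predict workflow that a deep learning model would follow as well.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


class NearestCentroidClassifier:
    """Toy supervised classifier: one mean vector (centroid) per class."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, samples: Sequence[Sequence[float]],
            labels: Sequence[str]) -> None:
        """Learn the per-class mean of the labeled training vectors."""
        grouped = defaultdict(list)
        for sample, label in zip(samples, labels):
            grouped[label].append(sample)
        self.centroids = {
            label: [sum(column) / len(rows) for column in zip(*rows)]
            for label, rows in grouped.items()
        }

    def predict(self, sample: Sequence[float]) -> str:
        """Return the label whose centroid is closest (Euclidean distance)."""
        return min(
            self.centroids,
            key=lambda label: math.dist(sample, self.centroids[label]),
        )


if __name__ == "__main__":
    # Hypothetical 2x2 binary images flattened to 4 raw pixel values each.
    train_x = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
    train_y = ["A", "A", "B", "B"]
    model = NearestCentroidClassifier()
    model.fit(train_x, train_y)
    print(model.predict([0, 0, 1, 0]))
```

In a real SLR system the input vectors would come from the preprocessing and feature extraction stages, and the classifier would be replaced by the chosen deep learning model.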

RECOMMENDATION

REFERENCES

- Shukor, A. Z., Miskon, M. F., Jamaluddin, M. H., Ali@Ibrahim, F., Asyraf, M., & Bahar, M. (2015). A New Data Glove Approach for Malaysian Sign Language Detection. 2015 IEEE International Symposium on Robotics and Intelligent Sensors (IRIS 2015), (pp. 60-67).

- Sutarman, Majid, M. B., & Zain, J. N. (2013). Vision-Based Sign Language Recognition Systems: A Review. Proceedings of the 2013 International Conference on Computer Science and Information Technology (CSIT-2013), (pp. 195-200).

- Tsuchiya, T., Shitara, A., Yoneyama, F., Kato, N., & Shiraishi, Y. (2020). Sensor Glove Approach for Japanese Fingerspelling Recognition System Using Convolutional Neural Networks. ACHI 2020 : The Thirteenth International Conference on Advances in Computer-Human Intera, (pp. 34-39).

- Tubaiz, N., Shanableh, T., & Assaleh, K. (2015, August). Glove-Based Continuous Arabic Sign Language Recognition in User-Dependent Mode. IEEE Transactions on Human-Machine Systems, 45(4), 526-533.

- Wehle, H.-D. (2017). Machine Learning, Deep Learning, and AI: What’s the Difference?

- Lokhande, P., Prajapati, R., & Pansare, S. (2015). Data Gloves for Sign Language Recognition System. International Journal of Computer Applications, 11-14.

- Mehdi, S. A., & Khan, Y. N. (2002). Sign Language Recognition using Sensor Gloves. z C, Leu MC (2011) American Sign Language word recognition with a sensory glove using artificial neural networks. Eng Appl Artif Intell 24(7):1204–1 (pp. 2204-2206). IEEE.

- Pansare, J. R., & Ingle, M. (2016). Vision-Based Approach for American Sign Language Recognition Using Edge Orientation Histogram. 2016 International Conference on Image, Vision and Computing (pp. 86-90). IEEE.

- Pigou, L., Herreweghe, M. V., & Dambre, J. (2017). Gesture and Sign Language Recognition with Temporal Residual Networks. 2017 IEEE International Conference on Computer Vision Workshops (ICCVW) (pp. 3086-3093). IEEE.

- Rosero-Montalvo, P. D., Godoy-Trujillo, P., Flores-Bosmediano, E., & Carrascal-García, J. (2018). Sign Language Recognition Based on Intelligent Glove Using Machine Learning Techniques. 2018 IEEE Third Ecuador Technical Chapters Meeting (ETCM), (pp. 1-5).

- Sharma, S., & Singh, S. (2021). Vision-based hand gesture recognition using deep learning for the interpretation of sign language. Expert Systems with Applications

- Ahmed, M. A., Zaidan, B. B., Zaidan, A. A., Salih, M. M., & Modi bin Lakulu, M. (2018). A Review on Systems-Based Sensory Gloves for Sign Language Recognition State of the Art between 2007 and 2017. 18(7).

- Al-Ahdal, M. E., & Tahir, N. M. (2012). Review in Sign Language Recognition Systems. 2012 IEEE Symposium on Computers & Informatics (ISCI), (pp. 52-57).

- Albawi, S., Mohammed, T. A., & Al-Zawi, S. (2017). Understanding of a Convolutional Neural Network. 2017 International Conference on Engineering and Technology (ICET) . Antalya, Turkey: IEEE.

- Cheok, M. J., Omar, Z., & Jaward, M. (2019). A review of hand gesture and sign language recognition techniques. International Journal of Machine Learning and Cybernetics, 10, 131-153.

- Kang, B., Tripathi, S., & Nguyen, T. Q. (2015). Real-time Sign Language Fingerspelling Recognition using Convolutional Neural Networks from Depth map.

- Lei, L., & Dashun, Q. (2015). Design of data-glove and chinese sign language recognition system based on ARM9. 2015 IEEE 12th International Conference on Electronic Measurement & Instruments, (pp. 1130-1134).
