
Relative likelihood intervals

The ratio of two likelihood values is also useful in its own right, as a measure of how plausible one parameter value is compared with another.

Definition.

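Suppose we have data $x_1, \ldots, x_n$ that arise from a population with likelihood function $L(\theta)$, with MLE $\hat\theta$. Then the relative likelihood of the parameter value $\theta$ is

$$R(\theta) = \frac{L(\theta)}{L(\hat\theta)}.$$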

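The relative likelihood quantifies how likely different values of $\theta$ are relative to the maximum likelihood estimate $\hat\theta$. Since $L(\hat\theta) \ge L(\theta)$ for every $\theta$, we always have $0 \le R(\theta) \le 1$, with $R(\hat\theta) = 1$.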
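The definition can be evaluated numerically. The sketch below is a minimal version in R. It makes two assumptions. First, the likelihood is available as a vectorised function `lik`, a hypothetical name. Second, the MLE is approximated by the grid point with the largest likelihood.

```r
# Relative likelihood R(theta) = L(theta) / L(theta_hat), evaluated on a grid.
# Assumption: the grid is fine enough that max(L) approximates L(theta_hat).
relative_likelihood <- function(lik, grid) {
  L <- lik(grid)  # likelihood at each candidate parameter value
  L / max(L)      # rescale so the (grid) maximum equals 1
}
```

By construction, the returned values lie between 0 and 1 and equal 1 at the grid maximiser.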

Using this definition, we can construct relative likelihood intervals, which are similar in spirit to confidence intervals.

Definition.

A $p\%$ relative likelihood interval for $\theta$ is defined as the set

$$\left\{\theta : R(\theta) \ge \frac{p}{100}\right\}.$$
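Numerically, the set can be approximated by keeping the grid points whose relative likelihood is at least $p/100$. The sketch below does this; `rl_interval`, `lik` and `grid` are hypothetical names, and the accuracy of the result depends on the grid spacing.

```r
# Approximate p% relative likelihood interval {theta : R(theta) >= p/100}.
# Hypothetical helper: returns the grid values that satisfy the condition.
rl_interval <- function(lik, grid, p) {
  L <- lik(grid)
  R <- L / max(L)      # relative likelihood on the grid
  grid[R >= p / 100]   # keep values at least p% as likely as the MLE
}
```

For a unimodal likelihood the kept values are contiguous. In that case, `range(rl_interval(lik, grid, 50))` gives approximate endpoints of a 50% interval.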

Example 12.4.2 Illegal downloads (cont.)

In the illegal downloads example, a 50% relative likelihood interval for $\theta$ would be

$$\left\{\theta : R(\theta) \ge 0.5\right\}.$$

By plugging in different values of , we see that the relative likelihood interval is . The values in the interval can be seen in the figure below.

Example 12.4.3 Sequential sampling with replacement: Smarties colours

Suppose we are interested in estimating $N$, the number of distinct colours of Smarties.

To estimate $N$, suppose members of the class make a number of draws with replacement and record the colour of each draw.

Suppose that the data collected (seven draws) were:

purple, blue, brown, blue, brown, purple, brown.

We record whether each draw gave a new colour or a repeat:

New, New, New, Repeat, Repeat, Repeat, Repeat.

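Let $N$ denote the number of distinct colours, and assume each colour is equally likely on every draw. The first draw is always new. Any later draw, made when $c$ distinct colours have already been seen, is new with probability $(N-c)/N$ and a repeat with probability $c/N$. Then the likelihood function for $N$ given the above data is:

$$L(N) = 1 \times \frac{N-1}{N} \times \frac{N-2}{N} \times \left(\frac{3}{N}\right)^4 = \frac{81\,(N-1)(N-2)}{N^6}, \qquad N = 3, 4, 5, \ldots$$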

If, in a second experiment, we observed:

New, New, New, Repeat, New, Repeat, New,

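then the likelihood would be:

$$L(N) = \frac{N-1}{N} \times \frac{N-2}{N} \times \frac{3}{N} \times \frac{N-3}{N} \times \frac{4}{N} \times \frac{N-4}{N} = \frac{12\,(N-1)(N-2)(N-3)(N-4)}{N^6}, \qquad N = 5, 6, 7, \ldots$$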

The MLEs in the two cases are $\hat N = 3$ and $\hat N = 8$, respectively.
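Because $N$ is a positive integer, the MLEs can be found by evaluating each likelihood over a range of candidate values. The sketch below does this in R. The function names `lik1` and `lik2` and the search range `1:30` are illustrative choices, and the model assumes every colour is equally likely on each draw.

```r
# Likelihoods for the number of colours N. A draw made after c distinct
# colours have been seen is new with prob (N - c)/N and a repeat with prob c/N.

# Experiment 1: New, New, New, Repeat, Repeat, Repeat, Repeat (3 colours seen)
lik1 <- function(N) ifelse(N >= 3, 81 * (N - 1) * (N - 2) / N^6, 0)

# Experiment 2: New, New, New, Repeat, New, Repeat, New (5 colours seen)
lik2 <- function(N) ifelse(N >= 5, 12 * (N - 1) * (N - 2) * (N - 3) * (N - 4) / N^6, 0)

# N is discrete, so maximise by enumeration rather than differentiation.
# The upper limit of 30 is an arbitrary choice; both likelihoods tend to 0.
N_grid <- 1:30
N_hat1 <- N_grid[which.max(lik1(N_grid))]
N_hat2 <- N_grid[which.max(lik2(N_grid))]
```

With these definitions, `N_hat1` is 3 and `N_hat2` is 8. The first experiment, in which every draw after the third was a repeat, points to the smallest value of $N$ consistent with the data.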

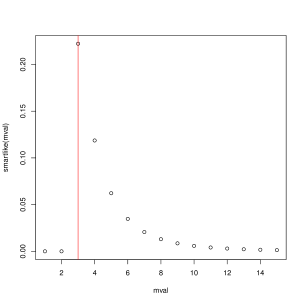

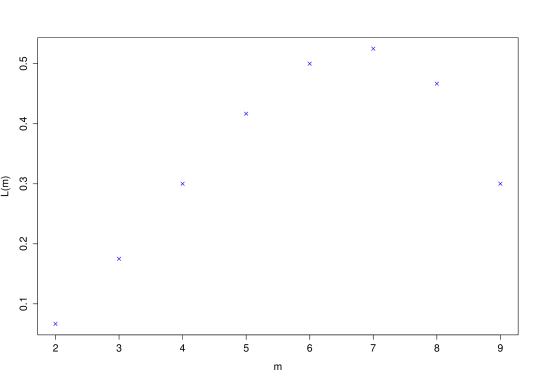

The plots below show the respective likelihoods.

|

|

R code for plotting these likelihoods:
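One possible version in base R is sketched below. It restates the likelihoods as `lik1` and `lik2` (names chosen for illustration) and plots them side by side. Plotting points rather than lines reflects the fact that $N$ is discrete, and the range 1 to 20 is an arbitrary choice.

```r
# Likelihoods for the two Smarties experiments (see the formulas above)
lik1 <- function(N) ifelse(N >= 3, 81 * (N - 1) * (N - 2) / N^6, 0)
lik2 <- function(N) ifelse(N >= 5, 12 * (N - 1) * (N - 2) * (N - 3) * (N - 4) / N^6, 0)

N_grid <- 1:20
op <- par(mfrow = c(1, 2))  # two panels side by side
plot(N_grid, lik1(N_grid), pch = 19, xlab = "N", ylab = "Likelihood",
     main = "Experiment 1")
plot(N_grid, lik2(N_grid), pch = 19, xlab = "N", ylab = "Likelihood",
     main = "Experiment 2")
par(op)                     # restore the previous graphics settings
```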

Example 12.4.4 Brexit opinions

Three randomly selected members of a class of 10 students are canvassed for their opinion on Brexit. Two are in favour of staying in Europe. What can one infer about the overall class opinion?

The parameter in this model is the number of pro-Remain students in the class, $\theta$, say. It is discrete and could take the values $0, 1, \ldots, 10$. The true, unknown value of $\theta$ is denoted by $\theta_0$.

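Let $X$ denote the number of pro-Remain students among the three canvassed, so that we observed $X = 2$. Since the likelihood function of $\theta$ is the probability (or density) of the observed data for given values of $\theta$, we have

$$L(\theta) = \Pr(X = 2;\, \theta) = \frac{\binom{\theta}{2}\binom{10-\theta}{1}}{\binom{10}{3}}$$

for $\theta = 0, 1, \ldots, 10$. Here $\binom{a}{b} = 0$ when $b > a$, so $L(\theta) = 0$ for $\theta \in \{0, 1, 10\}$.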

This function is defined only at the integer values $\theta = 0, 1, \ldots, 10$ (because the parameter is discrete). It therefore cannot be maximised by differentiation; instead, its values are compared directly.

The maximum likelihood estimate is $\hat\theta = 7$. Note that the points are not joined up in this plot, to emphasise the discrete nature of the parameter of interest.
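Because $\theta$ is discrete, the location of the maximum can be confirmed by comparing consecutive likelihood values. For $2 \le \theta \le 8$, the constant $\binom{10}{3}$ cancels, and

$$\frac{L(\theta+1)}{L(\theta)} = \frac{\binom{\theta+1}{2}(9-\theta)}{\binom{\theta}{2}(10-\theta)} = \frac{(\theta+1)(9-\theta)}{(\theta-1)(10-\theta)}.$$

This ratio exceeds 1 exactly when $(\theta+1)(9-\theta) > (\theta-1)(10-\theta)$, i.e. when $19 > 3\theta$, or $\theta \le 6$. So $L$ increases up to $\theta = 7$ and decreases afterwards. Numerically, $L(6) = 60/120$, $L(7) = 63/120$ and $L(8) = 56/120$.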

The probability model is an instance of the hypergeometric distribution.
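In R, the hypergeometric probability is available as `dhyper(x, m, n, k)`. This function gives the probability of `x` successes when `k` items are drawn without replacement from `m` successes and `n` failures. The sketch below uses it to evaluate $L(\theta)$ and checks the result against the explicit formula. The variable names are illustrative.

```r
# Likelihood for theta = number of pro-Remain students among 10,
# given that 2 of the 3 canvassed students favour Remain.
theta <- 0:10
L <- dhyper(2, m = theta, n = 10 - theta, k = 3)

# Same values from choose(theta, 2) * choose(10 - theta, 1) / choose(10, 3)
L_formula <- choose(theta, 2) * choose(10 - theta, 1) / choose(10, 3)

# Discrete parameter: maximise by direct comparison, not differentiation
theta_hat <- theta[which.max(L)]

# Plot points only (not joined up), reflecting the discrete parameter
plot(theta, L, pch = 19, xlab = expression(theta), ylab = "Likelihood")
```

With these definitions, `theta_hat` is 7, and `L` and `L_formula` agree.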