
12.3 Likelihood Examples: continuous parameters

We now explore examples of likelihood inference for some common models.

Example 12.3.1 Accident and Emergency

Accident and emergency departments are hard to manage because patients arrive at random (they are unscheduled). Some patients may need to be seen urgently.

Excess staff (doctors and nurses) must be avoided because this wastes NHS money; however, A&E departments also have to meet performance targets (e.g. patients dealt with within four hours). Staff levels therefore need to be ‘balanced’: enough staff to meet the targets, but not so many that money is wasted.

A first step in achieving this is to study data on patient arrival times. It is proposed that we model the time between patient arrivals as iid realisations from an Exponential distribution.

Why is this a suitable model?

What assumptions are being made?

Are these assumptions reasonable?

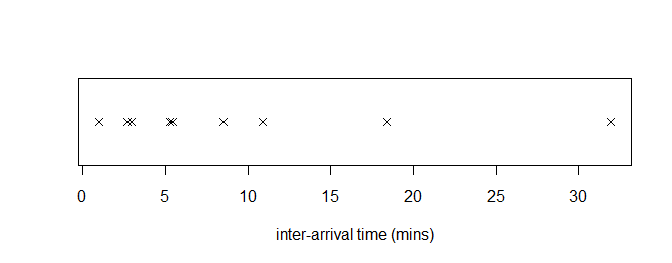

Suppose I stand outside Lancaster Royal Infirmary A&E and record the following inter-arrival times of patients (in minutes):

18.39, 2.70, 5.42, 0.99, 5.42, 31.97, 2.96, 5.28, 8.51, 10.90.

As usual, the first thing we do is look at the data!

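The exponential pdf is given by

$$f(x \mid \lambda) = \lambda e^{-\lambda x}$$

for $x \geq 0$, $\lambda > 0$. Assuming that the data are iid, the definition of the likelihood function for $\lambda$ gives us, for general data $x_1, \ldots, x_n$,

$$L(\lambda \mid \mathbf{x}) = \prod_{i=1}^{n} \lambda e^{-\lambda x_i} = \lambda^{n} \exp\left(-\lambda \sum_{i=1}^{n} x_i\right).$$

Note: we usually drop the ‘$\mid \mathbf{x}$’ from $L(\lambda \mid \mathbf{x})$ whenever possible.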

Usually, when we have products and the parameter is continuous, the best way to find the MLE is to find the log-likelihood and differentiate.

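So the log-likelihood is

$$l(\lambda) = \log L(\lambda) = n \log \lambda - \lambda \sum_{i=1}^{n} x_i.$$

Now we differentiate:

$$l'(\lambda) = \frac{n}{\lambda} - \sum_{i=1}^{n} x_i.$$

Now solutions to $l'(\lambda) = 0$ are potential MLEs:

$$\frac{n}{\lambda} - \sum_{i=1}^{n} x_i = 0 \quad \Longrightarrow \quad \hat{\lambda} = \frac{n}{\sum_{i=1}^{n} x_i} = \frac{1}{\bar{x}}.$$

To ensure this is a maximum we check the second derivative is negative:

$$l''(\lambda) = -\frac{n}{\lambda^{2}} < 0 \quad \text{for all } \lambda > 0.$$

So the solution we have found is the MLE, and plugging in our data ($\bar{x} = 9.254$ minutes) we find $\hat{\lambda} = 1/9.254 \approx 0.108$ per minute (via 1/mean(arrive)).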
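The closed-form answer can be checked numerically. The following is a minimal R sketch, assuming the ten inter-arrival times above are stored in a vector called arrive (the name used in the text). The helper loglik and the search interval are illustrative choices, not part of the original notes.

```r
# Inter-arrival times (in minutes) recorded in the example
arrive <- c(18.39, 2.70, 5.42, 0.99, 5.42, 31.97, 2.96, 5.28, 8.51, 10.90)

# Closed-form MLE for the exponential rate: n / sum(x) = 1 / mean(x)
lambda_hat <- 1 / mean(arrive)

# Exponential log-likelihood: l(lambda) = n * log(lambda) - lambda * sum(x)
loglik <- function(lambda, x) length(x) * log(lambda) - lambda * sum(x)

# Numerical maximisation over an assumed search interval (0.001, 1)
num <- optimize(loglik, interval = c(0.001, 1), x = arrive,
                maximum = TRUE, tol = 1e-8)

print(c(closed_form = lambda_hat, numerical = num$maximum))
```

The two values should agree to several decimal places, which is a quick sanity check on the calculus.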

Now that we have our MLE, we should check that the assumed model seems reasonable. Here, we will use a QQ-plot.
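One way such a QQ-plot could be drawn in R is sketched below. It assumes the data vector is called arrive, and it uses the plotting positions $(i - 0.5)/n$, which is one common convention and not necessarily the one used for the original figure.

```r
# Inter-arrival times (in minutes) recorded in the example
arrive <- c(18.39, 2.70, 5.42, 0.99, 5.42, 31.97, 2.96, 5.28, 8.51, 10.90)
lambda_hat <- 1 / mean(arrive)   # MLE of the exponential rate
n <- length(arrive)

# Plotting positions (i - 0.5) / n: an assumed convention
p <- (seq_len(n) - 0.5) / n

# Theoretical quantiles of the fitted Exponential(lambda_hat) distribution
theo <- qexp(p, rate = lambda_hat)

# Ordered data against fitted quantiles; points near the line y = x suggest a good fit
plot(theo, sort(arrive),
     xlab = "Theoretical quantiles", ylab = "Sample quantiles",
     main = "Exponential QQ-plot")
abline(0, 1)
```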

Given the small dataset, this seems ok – there is no obvious evidence of deviation from the exponential model.

Knowing that the exponential distribution is reasonable, and having an estimate of its rate, is useful for calculating staff scheduling requirements in the A&E.

Extensions of the idea consider flows of patients through the various services (take Math332 Stochastic Processes and/or the STOR-i MRes for more on this).

Example 12.3.2 Is human body temperature really 98.6 degrees Fahrenheit?

In an article by Mackowiak, P.A., Wasserman, S.S. and Levine, M.M. (1992), "A Critical Appraisal of 98.6 Degrees F, the Upper Limit of the Normal Body Temperature and Other Legacies of Carl Reinhold August Wunderlich", the authors measure the body temperatures of a number of individuals to assess whether true mean body temperature is 98.6 degrees Fahrenheit or not. A dataset of $n = 130$ individuals is available in the normtemp dataset. The data are assumed to be normally distributed with known standard deviation $\sigma$.

Why is this a suitable model?

What assumptions are being made?

Are these assumptions reasonable?

What do the data look like?

The plot (a histogram of the recorded temperatures) can be produced in R from the normtemp data.

The histogram of the data is reasonable, but there might be some skew in the data (right tail).

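The normal pdf is given by

$$f(x \mid \mu, \sigma) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left\{-\frac{(x - \mu)^{2}}{2\sigma^{2}}\right\},$$

where in this case, $\sigma$ is known.

The likelihood is then

$$L(\mu) = \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left\{-\frac{(x_i - \mu)^{2}}{2\sigma^{2}}\right\} = (2\pi\sigma^{2})^{-n/2} \exp\left\{-\frac{1}{2\sigma^{2}} \sum_{i=1}^{n} (x_i - \mu)^{2}\right\}.$$

Since the parameter of interest (in this case $\mu$) is continuous, we can differentiate the log-likelihood to find the MLE:

$$l(\mu) = -\frac{n}{2} \log(2\pi\sigma^{2}) - \frac{1}{2\sigma^{2}} \sum_{i=1}^{n} (x_i - \mu)^{2},$$

and so

$$l'(\mu) = \frac{1}{\sigma^{2}} \sum_{i=1}^{n} (x_i - \mu).$$

For candidate MLEs we set this to zero and solve, i.e.

$$\frac{1}{\sigma^{2}} \sum_{i=1}^{n} (x_i - \hat{\mu}) = 0 \quad \Longrightarrow \quad \sum_{i=1}^{n} x_i = n\hat{\mu},$$

and so the MLE is $\hat{\mu} = \bar{x}$.

This is also the “obvious” estimate (the sample mean). To check it is indeed an MLE, the second derivative of the log-likelihood is

$$l''(\mu) = -\frac{n}{\sigma^{2}} < 0,$$

which confirms this is the case.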
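The result can also be illustrated numerically. The R sketch below uses simulated values, not the normtemp data, and the known standard deviation of 0.7 and true mean of 98 are hypothetical numbers chosen only for illustration. It checks that maximising the normal log-likelihood in $\mu$ returns the sample mean.

```r
set.seed(1)
sigma <- 0.7                             # hypothetical known standard deviation
x <- rnorm(50, mean = 98, sd = sigma)    # simulated data, NOT the normtemp data

# Normal log-likelihood in mu, with sigma treated as known
loglik <- function(mu, x, sigma) sum(dnorm(x, mean = mu, sd = sigma, log = TRUE))

# The maximiser must lie between the smallest and largest observation
num <- optimize(loglik, interval = range(x), x = x, sigma = sigma,
                maximum = TRUE, tol = 1e-8)

print(c(sample_mean = mean(x), numerical = num$maximum))
```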

Using the data, we find $\hat{\mu} = \bar{x} \approx 98.25$.

This might indicate evidence for the body temperature being different from the assumed 98.6 degrees Fahrenheit.

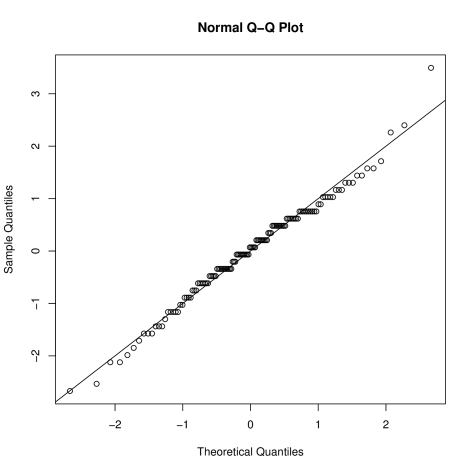

We now check the fit:

The fit looks good – although (as the histogram previously showed) there is possibly some mild right (positive) skew, indicated by the quantile points above the line.

Why might the QQ-plot show the “stepped” behaviour of the points?

Example 12.3.3

Every day I cycle to Lancaster University, and have to pass through the traffic lights at the crossroads by Booths (heading south down Scotforth Road). I am either stopped or not stopped by the traffic lights. Over a period of a term, I cycle in 50 times. Suppose that the time I arrive at the traffic lights is independent of the traffic light sequence.

On 36 of the 50 days, the lights are on green and I can cycle straight through. Let $\theta$ be the probability that the lights are on green. Write down the likelihood and log-likelihood of $\theta$, and hence calculate its MLE.

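With the usual iid assumption we see that, if $X$ is the number of times the lights are on green then $X \sim \text{Binomial}(50, \theta)$. So we have

$$P(X = x \mid \theta) = \binom{50}{x} \theta^{x} (1 - \theta)^{50 - x}.$$

We therefore have, for general $n$ and $x$,

$$L(\theta) = \binom{n}{x} \theta^{x} (1 - \theta)^{n - x}$$

and

$$l(\theta) = \log \binom{n}{x} + x \log \theta + (n - x) \log(1 - \theta).$$

Solutions to $l'(\theta) = 0$ are potential MLEs:

$$l'(\theta) = \frac{x}{\theta} - \frac{n - x}{1 - \theta} = 0,$$

and if $0 < \theta < 1$ we have

$$x(1 - \theta) = (n - x)\theta \quad \Longrightarrow \quad \hat{\theta} = \frac{x}{n}.$$

For this to be an MLE it must have negative second derivative:

$$l''(\theta) = -\frac{x}{\theta^{2}} - \frac{n - x}{(1 - \theta)^{2}} < 0.$$

In particular we have $x = 36$ and $n = 50$, and so $\hat{\theta} = 36/50 = 0.72$ is the MLE.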
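As a quick check of this calculation, the following R sketch maximises the binomial log-likelihood numerically for $n = 50$ and $x = 36$ and evaluates the second derivative at the estimate. The function name loglik and the search interval are illustrative assumptions.

```r
n <- 50   # number of journeys
x <- 36   # number of journeys on which the lights were green

# Closed-form MLE
theta_hat <- x / n

# Binomial log-likelihood as a function of theta
loglik <- function(theta) dbinom(x, size = n, prob = theta, log = TRUE)

# Numerical maximisation over (0, 1), avoiding the endpoints
num <- optimize(loglik, interval = c(0.001, 0.999), maximum = TRUE, tol = 1e-8)

# Second derivative at theta_hat: -x / theta^2 - (n - x) / (1 - theta)^2
d2 <- -x / theta_hat^2 - (n - x) / (1 - theta_hat)^2

print(c(closed_form = theta_hat, numerical = num$maximum, second_derivative = d2))
```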

Now suppose that over a two week period, on the 14 occasions I get stopped by the traffic lights (they are on red) my waiting times are given by (in seconds)

Assume that the traffic lights remain on red for a fixed amount of time $\theta$, regardless of the traffic conditions.

Given the above data, write down the likelihood of $\theta$, and sketch it. What is the MLE of $\theta$?

We are going to assume that these waiting times are drawn independently from $\text{Uniform}(0, \theta)$, where $\theta$ is the parameter we wish to estimate.

Why is this a suitable model?

What assumptions are being made?

Are these assumptions reasonable?

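Constructing the likelihood for this example is slightly different from those we have seen before. The pdf of the $\text{Uniform}(0, \theta)$ distribution is

$$f(x \mid \theta) = \begin{cases} \dfrac{1}{\theta} & 0 \leq x \leq \theta, \\ 0 & \text{otherwise.} \end{cases}$$

The unusual thing here is that the data enter only through the boundary conditions on the pdf. Another way to write the above pdf is

$$f(x \mid \theta) = \frac{1}{\theta} \, \mathbb{1}[0 \leq x \leq \theta],$$

where $\mathbb{1}[\cdot]$ is the indicator function.

For data $x_1, \ldots, x_n$, the likelihood function is then

$$L(\theta) = \prod_{i=1}^{n} \frac{1}{\theta} \, \mathbb{1}[0 \leq x_i \leq \theta].$$

Since every $x_i$ is non-negative, the product of indicators is 1 exactly when $\theta$ is at least as large as the largest observation. We can write this as

$$L(\theta) = \frac{1}{\theta^{n}} \, \mathbb{1}\left[\theta \geq \max_i x_i\right].$$

For our case we have $n = 14$, so $L(\theta) = \theta^{-14}$ for $\theta \geq \max_i x_i$ (the longest recorded waiting time) and $L(\theta) = 0$ otherwise.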

We are next asked to sketch this likelihood, which can be done in R.
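A sketch of how the likelihood could be plotted is given below. The waiting times used here are simulated stand-ins, not the recorded data, and the upper limit of 60 seconds used to simulate them is a purely hypothetical choice. The function name lik and the plotting grid are also illustrative.

```r
set.seed(42)
wait <- runif(14, min = 0, max = 60)   # hypothetical waiting times (seconds), NOT the recorded data

# Uniform(0, theta) likelihood: theta^(-n) if theta >= max(x), and 0 otherwise
lik <- function(theta, x) ifelse(theta >= max(x), theta^(-length(x)), 0)

# Grid of candidate theta values on either side of the largest observation
theta_grid <- seq(0.5 * max(wait), 2 * max(wait), length.out = 500)

plot(theta_grid, lik(theta_grid, wait), type = "l",
     xlab = "theta", ylab = "Likelihood",
     main = "Likelihood for Uniform(0, theta)")
abline(v = max(wait), lty = 2)   # the likelihood jumps from 0 to its maximum here

theta_hat <- max(wait)           # MLE: the largest observation
```

The curve is zero to the left of the largest observation, jumps to its maximum there, and decreases afterwards.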

From the plot it is clear that $\hat{\theta} = \max_i x_i$, since this is the value that leads to the maximum likelihood. Notice that solving $l'(\theta) = 0$ would not work in this case, since the likelihood is not differentiable at the MLE.

However, on the feasible range of $\theta$, i.e. $\theta \geq \max_i x_i$, we have

$$l(\theta) = -n \log \theta$$

and so

$$l'(\theta) = -\frac{n}{\theta}.$$

Remember that derivatives express the rate of change of a function. Since the derivative of the (log-)likelihood is negative ($\theta$ is strictly positive), the likelihood is decreasing on the feasible range of parameter values.

Since we are trying to maximise the likelihood, this means we should take the minimum over the feasible range as the MLE. The minimum value on the range is $\hat{\theta} = \max_i x_i$.